Table of contents

How to test MCP servers effectively (6 best practices)

.png)

Model Context Protocol (MCP) servers are often poorly built.

Their tools, or the functionality they expose, may not have clear descriptions; their error messages may be ambiguous; their authentication mechanisms can vary across tools, and more.

These issues can lead your AI agents to underperform, whether it’s calling the wrong tools or it’s taking the incorrect steps when working with users on remediating issues.

To prevent many, if not most, issues, you can test an MCP server comprehensively before your AI agent uses it in production.

Here are a few tips to help you do just that.

Use sandbox data for every test

This best practice is the most intuitive.

Your agentic use cases for an MCP server likely include using sensitive data, such as personally identifiable information (PII) on customers’ employees. And if you haven’t tested the MCP server carefully before using real-world data, you’re putting this data at risk of leaking to unauthorized individuals—which could have long-lasting consequences on your business.

To that end, only use sandbox data when you’re testing an MCP server.

{{this-blog-only-cta}}

Set up a wide range of test scenarios

Since your AI agents will likely support a diverse set of use cases, you’ll want to define a broad range of expected behaviors.

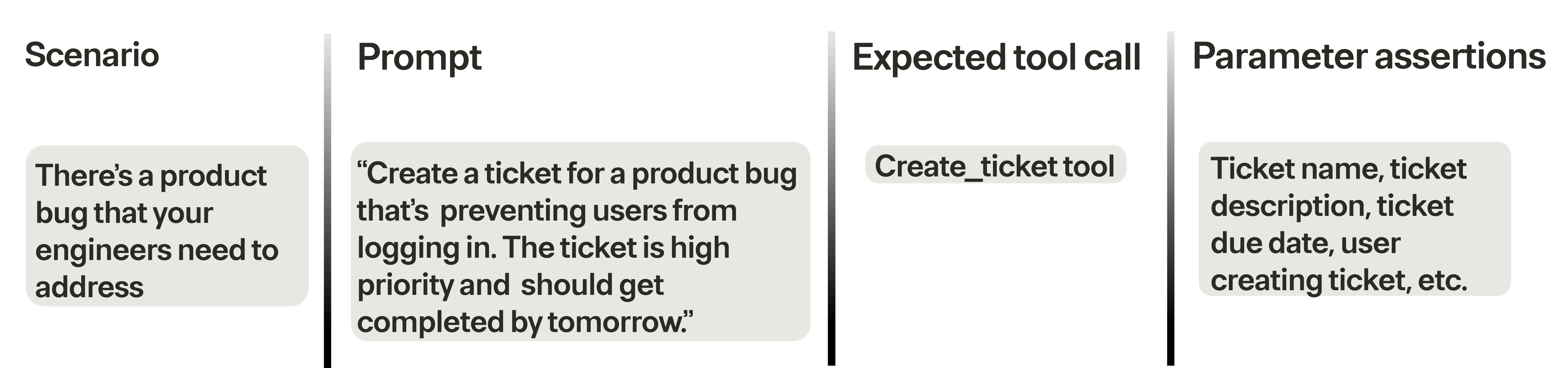

For each behavior, you should include the relevant prompt(s), the expected tool call(s), and the parameter assertions.

The table below lays out just one scenario.

The more time and effort you put into brainstorming different scenarios and outlining the expected behaviors, the better you can test the MCP server. So, it’s worth making this a comprehensive exercise that involves input from everyone who’s building to the MCP server and planning on using its tools.

Related: How to use MCP servers successfully

Evaluate the tools’ hit rates

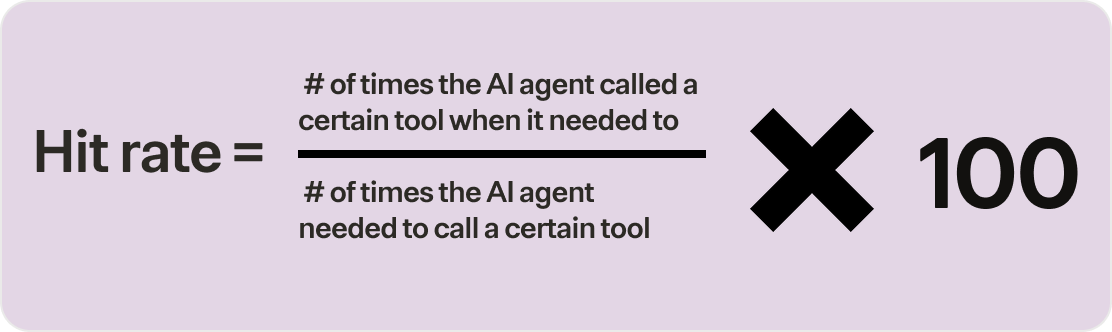

As you begin testing different scenarios, you should measure them against an increasingly important metric—a tool’s “hit rate”.

This metric refers to how often your AI agents make the appropriate tool calls.

An AI agent’s ability to both consistently determine whether to call a tool from a given prompt and call the correct one signals that the MCP server’s tools have comprehensive coverage, clear descriptions, and proper parameter schemas—all of which indicate the quality of the MCP server.

The ability to fine-tune an MCP server’s tool descriptions and schemas is also a strong indicator of quality, as it enables further improvements in a tool’s hit rate (but most out-of-the box MCP servers will, unfortunately, not provide this level of flexibility).

Assess the tools’ success rates

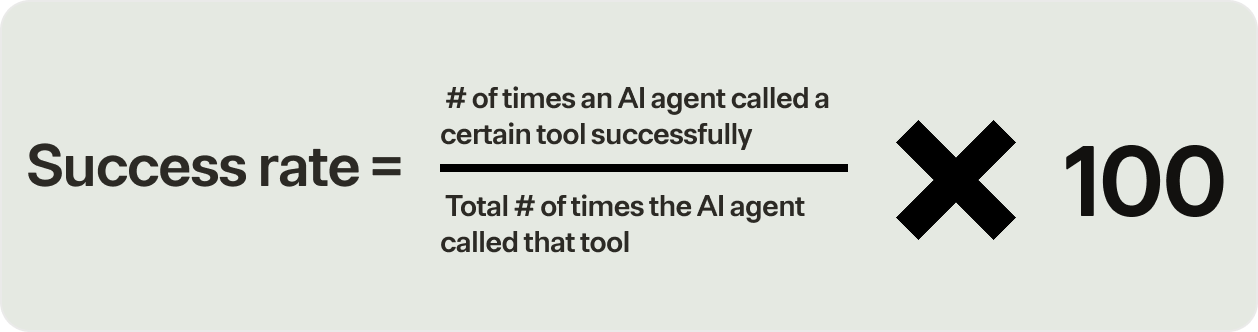

Calling the correct tool is just half the battle. The other half is calling that tool successfully.

This includes both the initial tool call and, if there’s errors, any follow-up attempts.

It’s important to measure this separately from a tool’s hit rate.

As mentioned earlier, a tool’s hit rate measures things like the tool coverage and a tool’s description quality. The success rate, on the other hand, shows how well a tool manages authentication, surfaces issues (e.g., reaching a rate limit), handles the parameters that get passed, and more.

In other words, by separating the two metrics, you can better hone in on the strengths and weaknesses of a given tool.

For instance, a tool with a high hit rate but a low success rate suggests that the MCP server provides clear descriptions and schemas but suffers from poor execution quality; but a high success rate and a low hit rate can mean that the MCP server’s tools aren’t comprehensive.

Related: The top challenges of using MCP servers

Track unnecessary tool calls

For any scenario you test, you’ll expect certain tool calls. But your AI agents may make additional ones that are not only unnecessary but also costly.

Here are just some of the issues of unneeded tool calls:

- The workflow experiences additional latency, and for time-sensitive processes, this can meaningfully hurt its performance

- You may face additional costs over time

- It can break your customer's trust with your product if they notice your AI agent making unnecessary tool calls to achieve a given outcome

- The process of troubleshooting a workflow issue becomes more complex as there are more variables that need to be isolated and reviewed

Fortunately, if you explicitly lay out all of the expected tool calls across your test scenarios (as highlighted in our second best practice), this issue will be easy to identify and address over time.

Test your agents’ tool calls comprehensively with Merge Agent Handler

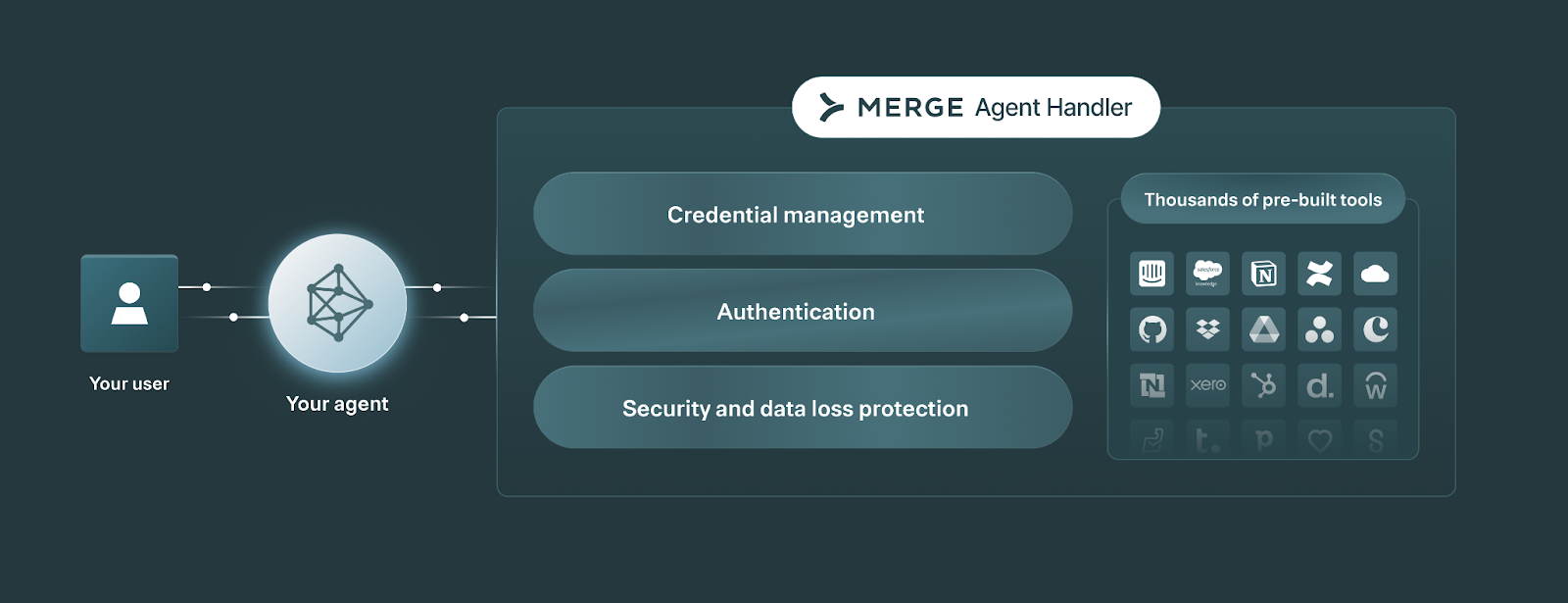

Merge Agent Handler is a single platform that lets you connect your AI agents to any third-party connector, and manage and monitor all tool interactions.

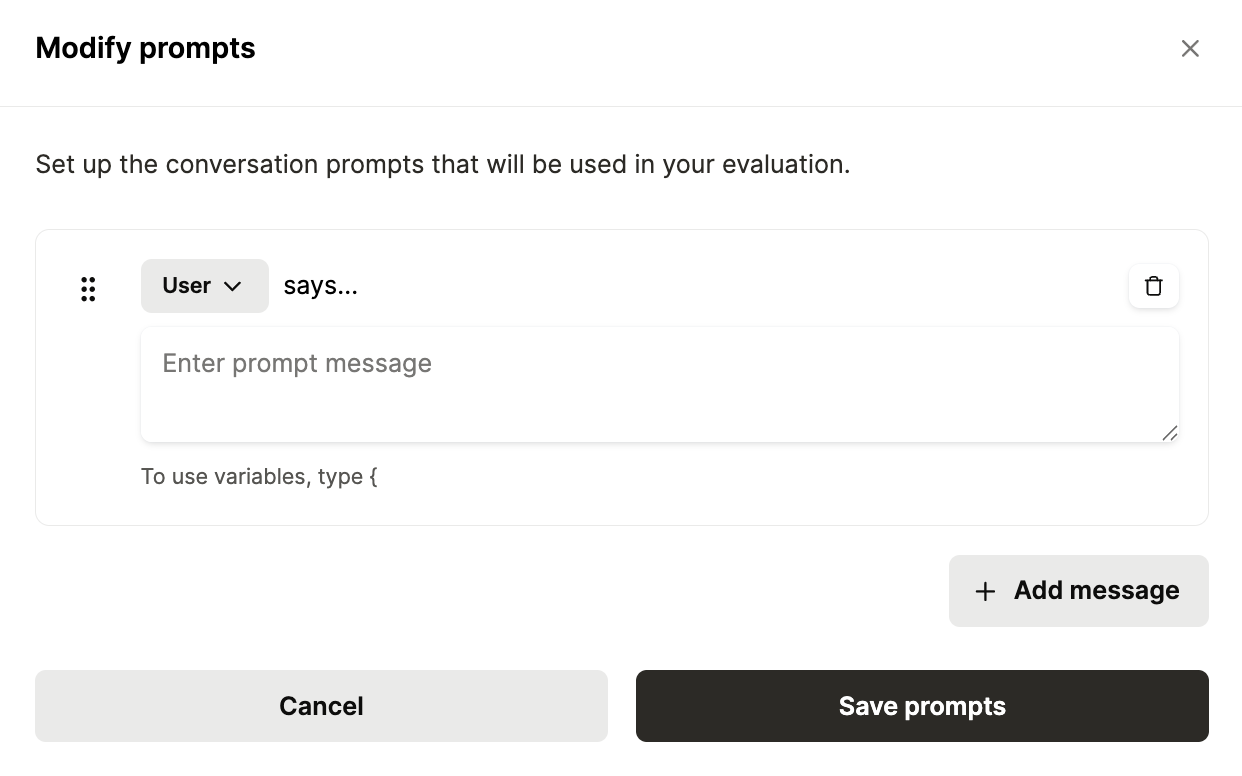

It includes an Evaluation Suite that lets you validate tools by running representative prompts to confirm they behave as expected, including auth paths and edge cases.

You can start using the Evaluation Suite—and Merge Agent Handler more broadly—by creating a free account!

.jpg)

.png)

.png)