Integrations for AI agents: what you need to know

As you look to build AI agents in your product, you’ll inevitably need to support integrations that can access and interact with your customers’ data.

To help you navigate this successfully, we’ll break down examples of AI agents that use integrations and your options for building and maintaining any integration.

But first, let’s align on why AI agents require integrations.


How integrations support AI agents

It comes down to a few key reasons.

Powers RAG pipelines

Integrations form the foundation of retrieval-augmented generation (RAG) pipelines, enabling AI agents to retrieve the context needed to effectively take actions on behalf of each user.

For example, say you offer a headcount analysis solution and provide an AI agent to help users get more value from your platform.

Using RAG, your AI agent can take a question (e.g., “What are the top drivers behind the recent surge in headcount costs?”) and use the customer’s integrated employee and payroll data to provide an answer—along with recommended next steps.
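Here’s a minimal Python sketch of that retrieve-then-answer flow. It assumes payroll records have already been synced through an integration, and it ranks them by simple keyword overlap as a stand-in for the vector search a production RAG pipeline would use. `PayrollRecord`, `retrieve`, and `build_prompt` are hypothetical names, and the LLM call itself is left out.

```python
from dataclasses import dataclass


@dataclass
class PayrollRecord:
    # Hypothetical normalized record synced from a customer's payroll integration
    employee_id: str
    department: str
    summary: str


def tokenize(text: str) -> set[str]:
    # Lowercase and strip basic punctuation so "costs?" matches "costs"
    words = (w.strip(".,?!:;()").lower() for w in text.split())
    return {w for w in words if w}


def retrieve(question: str, records: list[PayrollRecord], k: int = 2) -> list[PayrollRecord]:
    # Rank by keyword overlap with the question (a stand-in for vector search)
    q = tokenize(question)
    ranked = sorted(records, key=lambda r: len(q & tokenize(r.summary)), reverse=True)
    # Keep the top k, dropping records that share no terms with the question
    return [r for r in ranked[:k] if q & tokenize(r.summary)]


def build_prompt(question: str, context: list[PayrollRecord]) -> str:
    # Place the retrieved records ahead of the question to ground the model's answer
    lines = "\n".join(f"- [{r.department}] {r.summary}" for r in context)
    return (
        "Answer using only the context below. If it is insufficient, say so.\n"
        f"Context:\n{lines}\n\nQuestion: {question}"
    )
```

Telling the model to answer only from the supplied context is one common way to keep it grounded in the customer’s data; it reduces, but doesn’t eliminate, the chance of a made-up answer.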

Related: How AI agents can use APIs

Offers raw and normalized customer data

Each type of data can be valuable.

Normalized data, or data that’s been transformed to fit a predefined data model, can exclude sensitive information, unnecessary details, and duplicate records.
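Here’s a rough sketch of what normalization can look like, assuming two hypothetical HRIS providers that name the same employee fields differently. The field mappings, the `Employee` model, and the `normalize` function are all illustrative; real provider payloads will look different.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Employee:
    # Hypothetical common model: only the fields the agent actually needs
    remote_id: str
    full_name: str
    department: str
    is_active: bool


# Hypothetical per-provider field mappings (common field -> provider field)
FIELD_MAPS = {
    "hris_a": {"remote_id": "id", "full_name": "name", "department": "dept", "is_active": "active"},
    "hris_b": {"remote_id": "workerId", "full_name": "displayName", "department": "orgUnit", "is_active": "isCurrent"},
}


def normalize(provider: str, raw_records: list[dict]) -> list[Employee]:
    mapping = FIELD_MAPS[provider]
    seen: set[str] = set()
    employees: list[Employee] = []
    for raw in raw_records:
        # Copy only mapped fields; anything else (e.g., an "ssn" key) is dropped
        emp = Employee(**{common: raw[source] for common, source in mapping.items()})
        # Skip duplicates, e.g., the same worker returned twice across pages
        if emp.remote_id in seen:
            continue
        seen.add(emp.remote_id)
        employees.append(emp)
    return employees
```

Because only mapped fields are copied over, anything outside the common model, like a Social Security number, never makes it into the normalized record.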

This clean, consistent data helps embedding models generate accurate vector representations, which are then stored in a vector database. This, in turn, lets your AI agent consistently retrieve the most relevant data across supported workflows.
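The sketch below shows the embed, store, and retrieve loop in miniature. The `embed` function is a toy hashing-based embedding and `InMemoryVectorStore` is a hypothetical stand-in for a vector database; in practice you’d call a real embedding model and a real vector store, but the cosine-similarity ranking works the same way.

```python
import math
import zlib

DIM = 256  # assumption: a tiny dimension, for illustration only


def embed(text: str) -> list[float]:
    # Toy "hashing trick" embedding standing in for a real embedding model
    vec = [0.0] * DIM
    for token in text.lower().split():
        vec[zlib.crc32(token.encode()) % DIM] += 1.0
    norm = math.sqrt(sum(v * v for v in vec)) or 1.0
    # Unit length, so a dot product equals cosine similarity
    return [v / norm for v in vec]


class InMemoryVectorStore:
    # Hypothetical stand-in for a vector database
    def __init__(self) -> None:
        self._items: list[tuple[str, list[float]]] = []

    def add(self, doc_id: str, text: str) -> None:
        self._items.append((doc_id, embed(text)))

    def search(self, query: str, k: int = 3) -> list[tuple[str, float]]:
        q = embed(query)
        scored = [(doc_id, sum(a * b for a, b in zip(q, v))) for doc_id, v in self._items]
        return sorted(scored, key=lambda pair: pair[1], reverse=True)[:k]
```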

Raw data, or data left exactly as it appears in the customer’s application, helps your AI agent support workflows that are unique to individual customers.

For example, imagine you offer a product intelligence platform with an AI agent that listens to customer conversations. Based on those conversations, the agent generates recurring reports highlighting key themes.

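Now say that a specific customer has a custom CRM object, “Use case(s),” that they’d like included and populated in these reports. A predefined data model wouldn’t capture this customer-specific object, so your agent would need the raw data to fill it in. By seeing which use cases keep coming up, this customer’s product team could then better prioritize which features to build over time.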
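Here’s how an agent might read that custom field, assuming each opportunity record carries the provider’s unaltered payload under a `raw` key. The field name `Use_Cases__c` (Salesforce-style custom field naming) and the semicolon-delimited value format are assumptions for illustration.

```python
from collections import Counter


def use_case_counts(opportunities: list[dict], custom_field: str = "Use_Cases__c") -> Counter:
    """Tally values of a customer-specific CRM field that only exists in the raw payload."""
    counts: Counter = Counter()
    for opp in opportunities:
        # The normalized model has no slot for this field, so read it from the raw data
        raw_value = opp.get("raw", {}).get(custom_field)
        if not raw_value:
            continue
        # Assumption: multi-select values arrive as one semicolon-delimited string
        counts.update(v.strip() for v in raw_value.split(";") if v.strip())
    return counts
```

`counts.most_common(3)` would then give the report its top recurring themes for this customer.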

Related: The best agent integration platforms in 2026

Supports time-sensitive processes

Your AI agents may need to support time-sensitive workflows, like routing leads, de-provisioning a departing employee’s applications, creating and assigning tickets for a product bug, and more.

Integrations can help facilitate these use cases, among countless others, by supporting real-time or near-real-time syncs with your customers’ systems.
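One common way to approximate real-time syncs is incremental polling: on a short interval, ask the source system only for records modified since the last sync. The sketch below assumes a hypothetical `fetch` function that supports a modified-after filter; many APIs offer something similar, but the exact parameter varies.

```python
from datetime import datetime
from typing import Callable

# Hypothetical fetch: returns records whose "modified_at" is later than the given time
FetchFn = Callable[[datetime], list[dict]]


def sync_once(fetch: FetchFn, cursor: datetime, handle: Callable[[dict], None]) -> datetime:
    """Process only records changed since `cursor` and return the advanced cursor."""
    for record in sorted(fetch(cursor), key=lambda r: r["modified_at"]):
        handle(record)  # e.g., route a lead or revoke a departing employee's access
        cursor = max(cursor, record["modified_at"])
    return cursor
```

A scheduler would call `sync_once` every few seconds or minutes and persist the returned cursor. Because the comparison is strict, records that share a timestamp across a sync boundary could be missed; production syncs often overlap the window slightly and deduplicate by ID.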

Examples of AI agent integrations

To better understand how AI agents can use integrations, let’s break down a few real-world examples.

Proposal Writer uses file storage and CRM integrations to generate personalized offers

Ema, which lets you build and manage AI agents across teams, offers a Proposal Writer agent that takes customers’ prompts (e.g., write a proposal for a customer in X industry that needs Y plan) and uses integrated data, among other context, to generate compelling proposals within seconds.

It does this by leveraging similar proposals in the customer’s file storage system and by evaluating the notes, along with other helpful information, within the prospect’s opportunity page in the CRM.


Juicebox Agent uses ATS integrations to help surface high-fit candidates

Juicebox, which lets recruiters find and bring in talent, offers an agent that can surface thousands of high-fit candidates in seconds.

Here’s how it works: A user clicks on a role they want candidates for (existing open roles are surfaced in Juicebox via the integrated applicant tracking system).

The agent then uses the integrated job description associated with the selected role, along with additional context, to kick off its search.

Nova uses ATS and HRIS integrations to help HR teams manage countless tasks

Peoplelogic, the AI for HR platform, offers Nova, a suite of AI agents that can help HR teams perform specific tasks in Slack.

To help its agents successfully execute tasks on behalf of users, Peoplelogic uses integrated candidate and employee data.

For example, a people ops leader can ask a Nova agent (“Shruti”) to remind every interviewer about their upcoming interviews that day on Slack. The people ops leader can also clarify when the messages should be sent and what context can be included.

Shruti first determines which agent should take on the task (in this case, “Noah”). Noah can then use the integrated candidate data to send the messages on time, along with the appropriate context from each candidate’s application.


Best practices for building integrations with AI agents

Before you begin investing resources in integration development, it’s worth considering the following.

Navigate the build versus buy decision carefully

In most cases, tasking your own engineers with implementing these integrations isn’t worth it. It pulls them away from developing your AI agents and working on other core projects, which are likely what they’re uniquely qualified to tackle and what will differentiate your AI product over the long term.

That said, if your agents need to support highly custom integration use cases and/or you have resources you can afford to allocate toward integration projects, it can be worth implementing them in-house.

The “Integration options for AI agents” section below, which walks through each approach to building and maintaining integrations for AI agents, can help you decide on the best one.

Implement access control lists (ACLs) across your integrations

The last thing you want is your AI agents performing actions on behalf of users in integrated applications without respecting the users’ permission levels.

For example, if one of your users requests information about an integrated file they don’t have access to, your AI agent should never reveal any details from that file—regardless of the user’s prompt.

To prevent your AI agents from inadvertently exposing sensitive data, it’s essential to implement ACLs across all integrations you develop.
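A minimal way to enforce ACLs in a RAG pipeline is to filter retrieved documents against the requesting user’s permissions before anything reaches the model. The sketch assumes each synced document carries the user and group IDs allowed to read it; `Document`, `can_read`, and `filter_context` are hypothetical names.

```python
from dataclasses import dataclass, field


@dataclass
class Document:
    doc_id: str
    content: str
    # Hypothetical ACL synced from the source app: who is allowed to read this document
    allowed_users: set[str] = field(default_factory=set)
    allowed_groups: set[str] = field(default_factory=set)


def can_read(doc: Document, user_id: str, user_groups: set[str]) -> bool:
    return user_id in doc.allowed_users or bool(doc.allowed_groups & user_groups)


def filter_context(docs: list[Document], user_id: str, user_groups: set[str]) -> list[Document]:
    # Drop unreadable documents before prompting, so no prompt can coax the model into revealing them
    return [d for d in docs if can_read(d, user_id, user_groups)]
```

Documents with no ACL entries are unreadable by default, so a missing permission sync fails closed rather than open.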

Leverage webhooks to help your AI agents respond to events in real time

Certain use cases require an immediate response from your AI agent.

For example, if one of your customer’s prospects becomes a marketing-qualified lead (MQL), your AI agent should do something like the following in real time:

1. Use integrated CRM data to identify the assigned sales rep.

2. Analyze the account’s activity history—stored in the marketing automation platform and/or CRM—to determine the best follow-up steps and messaging for the rep.

3. Notify the rep via an app like Slack with this information to enable them to follow up quickly and effectively.

To facilitate these time-sensitive, agentic workflows, implement webhooks that notify your AI agents when specific events occur, such as a prospect in one of your customer’s integrated apps reaching marketing-qualified lead status.
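Here’s a hedged sketch of the receiving end. It assumes the sender signs the raw request body with HMAC-SHA256 using a shared secret (a common pattern, but check each provider’s scheme) and uses a hypothetical event payload. The three returned actions correspond to the steps listed above; the actual CRM, marketing automation, and Slack calls are placeholders.

```python
import hashlib
import hmac
import json

WEBHOOK_SECRET = b"replace-me"  # hypothetical secret shared with the webhook sender


def verify_signature(body: bytes, signature_hex: str, secret: bytes = WEBHOOK_SECRET) -> bool:
    # Assumption: the sender sends a hex HMAC-SHA256 digest of the raw body
    expected = hmac.new(secret, body, hashlib.sha256).hexdigest()
    return hmac.compare_digest(expected, signature_hex)


def handle_webhook(body: bytes, signature_hex: str) -> list[str]:
    """Return the agent's planned actions; a real handler would call the CRM, MAP, and Slack."""
    if not verify_signature(body, signature_hex):
        raise PermissionError("invalid webhook signature")
    event = json.loads(body)
    # Hypothetical event shape: {"type": "lead.status_changed", "data": {...}}
    data = event.get("data", {})
    if event.get("type") != "lead.status_changed" or data.get("status") != "MQL":
        return []
    return [
        f"lookup_owner:{data['account_id']}",        # 1. find the assigned rep in the CRM
        f"summarize_activity:{data['account_id']}",  # 2. analyze the account's activity history
        f"notify_slack:{data['lead_id']}",           # 3. message the rep with next steps
    ]
```

In production, you’d typically acknowledge the webhook quickly and hand the event to a queue, so slow LLM calls don’t cause the sender to time out and retry.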

Challenges of building integrations with AI agents

No matter how many best practices you follow, implementing integrations for your AI agents can still prove difficult. Here’s why:

- Hallucinations: Even when they’re grounded in accurate, relevant information via RAG pipelines, AI agents can still generate incorrect outputs. And if an output is inaccurate by even a minuscule amount, it can lead your customers and/or colleagues to make suboptimal decisions

- Security risks: Malicious actors can exploit your AI agents in unforeseen ways to expose sensitive customer data. For instance, if you’re integrating your AI agent with MCP servers, well-executed prompt injection attacks can lead to sensitive data getting exposed

Note: GitHub recently faced this issue. Researchers showed that a prompt injection attack against the developer platform’s MCP server could leak data from private repositories.

- Scaling: As you add more agents, managing them becomes increasingly complex and time-consuming for your developers. Tracking which agents are being added, which systems they need to integrate with, and the specific types of data they should access is already difficult—successfully enforcing those rules over time is even harder

- Error handling: Like any other integration, your integrations with AI agents will inevitably break and/or suffer performance degradations. Enabling your team to detect when this happens, diagnose the problem, and implement the right fix requires comprehensive agent monitoring infrastructure, which can take your engineers months to build

- Unclear data schemas: Your customers store overlapping types of data across applications, like prospect data in a CRM or employee data in an HRIS. However, each customer’s instance of these applications can use a different schema, and many of those schemas are difficult for an LLM to interpret correctly

For example, the field “WorkerStatus” in a customer’s HRIS software could have several interpretations (such as employment type or whether an employee is still active). This can lead the AI agent to use the wrong interpretation and take a problematic set of follow-up actions (e.g., offboarding an employee in the customer’s HRIS software).
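One way to avoid guessing is to resolve ambiguous fields through an explicit, per-customer mapping and have the agent fail closed when a value’s meaning is unknown. The customer keys, the `WorkerStatus` codes, and the helper functions below are hypothetical.

```python
from enum import Enum


class EmploymentStatus(Enum):
    ACTIVE = "active"
    TERMINATED = "terminated"
    UNKNOWN = "unknown"


# Hypothetical per-customer mappings, confirmed with each customer rather than inferred by an LLM
WORKER_STATUS_MAP = {
    "customer_a": {"A": EmploymentStatus.ACTIVE, "T": EmploymentStatus.TERMINATED},
    # For customer_b, "WorkerStatus" holds employment type, so it says nothing about activity
    "customer_b": {},
}


def employment_status(customer: str, record: dict) -> EmploymentStatus:
    raw = record.get("WorkerStatus")
    return WORKER_STATUS_MAP.get(customer, {}).get(raw, EmploymentStatus.UNKNOWN)


def may_offboard(customer: str, record: dict) -> bool:
    # Fail closed: only take a destructive action when the field's meaning is explicitly known
    return employment_status(customer, record) is EmploymentStatus.TERMINATED
```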

Depending on the solution you adopt to build and maintain integrations with AI agents, you may be able to prevent many of the issues described above. To that end, we’ll review each approach carefully.

Related: The relationship between AI agents and MCP

Integration options for AI agents

Here are your options for building integrations for customer-facing AI agents.

Native builds

This simply involves your developers building and maintaining the integrations themselves.

Pros

- You have full control over which integrations get prioritized and how they should be built

- You’re entrusting your own development team (who you know and trust) to build integrations—not a separate team you’re less familiar with

- You don’t have to worry about a 3rd-party integration provider going out of business, moving away from certain integrations, or doing anything else that would directly impact your product and your AI agents

Cons

- Building each integration is incredibly complex and resource intensive. Since your AI agent likely needs to access dozens of systems, native integrations can be the wrong approach

- Building and maintaining product integrations is often high pressure and unpleasant. For instance, when integrations break, your CS and leadership teams will know and will ask your devs to drop what they're working on to resolve it as soon as possible. This stressful and constant distraction can lead to burnout and turnover

- Implementing integration observability tooling, such as issue detection notifications in an app like Datadog, can also take your engineers several weeks, if not months

For example, Will Decker, the Head of Engineering at BrightHire, an interview intelligence platform, shared the insight below in our case study:

“Building our own integration observability tooling to manage integrations would take our team at least 3 months.”

Embedded iPaaS solution

An embedded integration platform as a service (iPaaS) lets you build customer-facing integrations and automations through a workflow builder interface.

Pros

- Helps accelerate integration and automation development by providing pre-built connectors and automation templates

- Many providers also offer complementary solutions, like an embeddable marketplace for your app

- Lets you choose between implementing all the integrations and automations yourself and allowing customers to build and maintain the integrations (or a combination of both)

Cons

- Forces you to become familiar with their UX and implement each individual integration, which can prove time and resource intensive for your team

- Fails to provide robust integration management capabilities, like diagnosing specific integration issues

- Doesn’t normalize data, so you’ll have to do this work yourself

Model Context Protocol

Anthropic’s Model Context Protocol (MCP) lets AI agents interact with customers’ data via tools: specific functionality and data that an MCP server exposes.
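Under the hood, MCP uses JSON-RPC 2.0 messages: a client discovers a server’s tools with `tools/list` and invokes one with `tools/call`. The sketch below builds such a request for a hypothetical `search_candidates` tool, with a deliberately minimal check of the tool’s required arguments. It covers only the message format; transport and session setup are left to an MCP SDK.

```python
import json

# A tool as an MCP server might describe it in a `tools/list` result (the tool is hypothetical)
SEARCH_TOOL = {
    "name": "search_candidates",
    "description": "Search applicants for an open role",
    "inputSchema": {
        "type": "object",
        "properties": {"role_id": {"type": "string"}, "limit": {"type": "integer"}},
        "required": ["role_id"],
    },
}


def build_tool_call(request_id: int, tool: dict, arguments: dict) -> str:
    """Build a JSON-RPC 2.0 `tools/call` request after a minimal required-field check."""
    missing = [k for k in tool["inputSchema"].get("required", []) if k not in arguments]
    if missing:
        raise ValueError(f"missing required arguments: {missing}")
    return json.dumps({
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "tools/call",
        "params": {"name": tool["name"], "arguments": arguments},
    })
```

In an agent, the LLM would choose the tool and fill in `arguments` from the tool’s description and schema, which is what lets MCP-based agents act autonomously.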

Pros

- Lets AI agents act autonomously, as they can decide which tools to use based on users’ prompts

- Most SaaS providers have spun up (or will spin up) MCP servers, making this approach usable for nearly any use case

- Relatively easy to implement, as it offers a single, standard protocol for LLMs and SaaS providers to follow

Cons

- MCP servers can be easily manipulated into sharing sensitive information (e.g., tokens stored in an MCP server) with unauthorized users

- Many fraudulent MCP servers (“Shadow servers”) are cropping up, and it can be hard to differentiate them from legitimate ones

- The protocol is still in its infancy. It could change in ways that break your implementation or receive less support from data providers over time, making it unsuitable for your AI agents

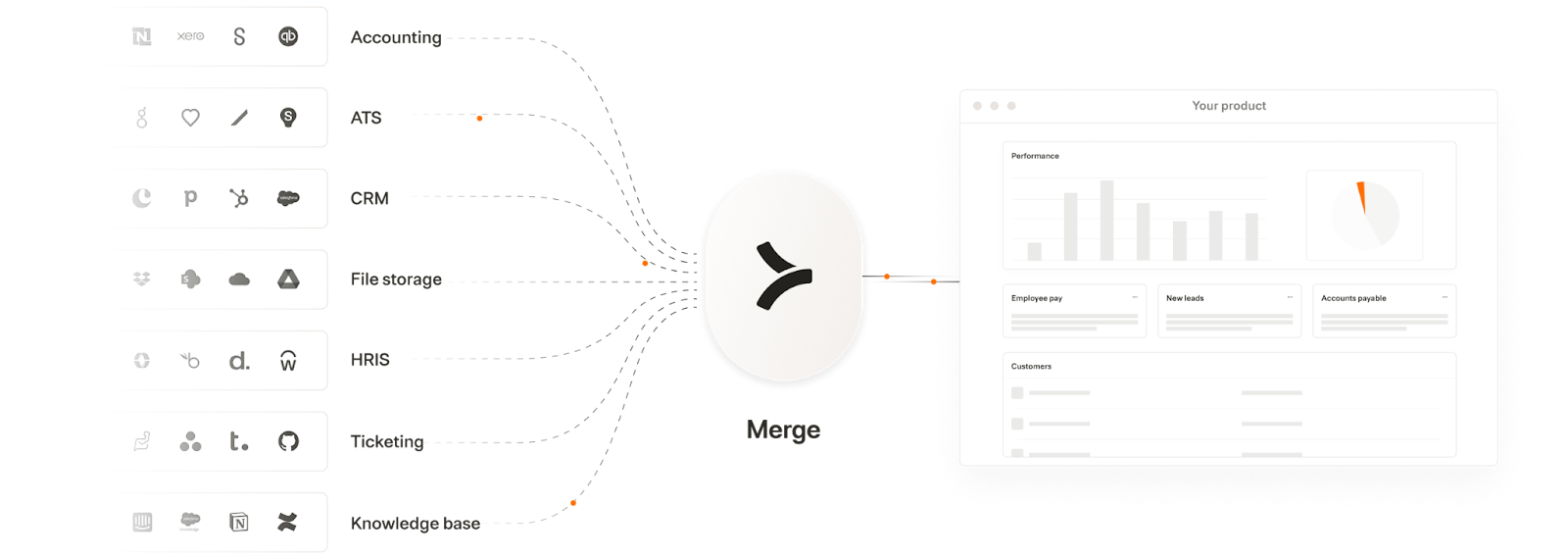

Unified API platform

A unified API solution lets you add hundreds of cross-category integrations through a single integration build.
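From your product’s side, “a single integration build” can look like one request shape that works for every HRIS a customer might use, with a per-customer token deciding which system is read. The base URL, header names, and response envelope below are hypothetical placeholders, not any specific provider’s API.

```python
import json
import urllib.request

# Hypothetical unified API endpoint; check your provider's docs for the real one
BASE_URL = "https://unified-api.example.com/hris/v1"


def build_employees_request(api_key: str, account_token: str, page_size: int = 100) -> urllib.request.Request:
    """One request shape for every HRIS; the account token selects the customer's system."""
    return urllib.request.Request(
        f"{BASE_URL}/employees?page_size={page_size}",
        headers={
            "Authorization": f"Bearer {api_key}",
            "X-Account-Token": account_token,  # hypothetical per-customer linked-account header
        },
    )


def fetch_employees(api_key: str, account_token: str) -> list[dict]:
    with urllib.request.urlopen(build_employees_request(api_key, account_token)) as resp:
        return json.load(resp)["results"]  # assumption: a {"results": [...]} page envelope
```

Whether a customer uses one HRIS or another, your code path stays the same; only the account token changes.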

Pros

- Lets you support all the integrations your AI agent needs, quickly and easily

- Some providers (like Merge) normalize all of the integrated data before syncing it with your product

- Offers a seamless process for authenticating with an application (typically via a UI component that’s embedded in your product)

Cons

- Most providers support limited types of data and/or integrations, making them a bad long-term fit

- Some providers are relatively new (founded within the last two years), and their long-term viability is questionable

- A few providers fall back on insecure integration methods (e.g., “Assisted Integrations”) when the underlying applications don’t support the relevant endpoints

Given all the integration methods to choose from, it can be hard to pinpoint the best option for your AI agent’s use cases (and, by extension, the best AI integration platform).

You can follow the principle below as a general rule of thumb:

If your AI agent’s use case involves dynamic decision-making, opt for MCP; if the use case is static (i.e., follows a predefined workflow), use a unified API solution.

So, going back to our examples, Juicebox can use the unified API approach since their agent follows the same process every time when using integrated ATS data. On the other hand, Peoplelogic may want to leverage MCP because their agents’ workflows for executing tasks are less defined.
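The difference between the two patterns can be sketched as follows. The tools are hypothetical stubs, and `choose_tool` stands in for the LLM’s tool-selection step, which in an MCP setup would be driven by the tools the server exposes.

```python
from typing import Callable


# Hypothetical integration-backed tools (stubs)
def get_job_description(arg: str) -> str:
    return f"JD for {arg}"


def search_candidates(arg: str) -> str:
    return f"candidates matching {arg}"


def send_slack_reminder(arg: str) -> str:
    return f"reminder sent to {arg}"


TOOLS: dict[str, Callable[[str], str]] = {
    "get_job_description": get_job_description,
    "search_candidates": search_candidates,
    "send_slack_reminder": send_slack_reminder,
}


def static_pipeline(role_id: str) -> str:
    # Same steps in the same order every time: a natural fit for a unified API
    return search_candidates(get_job_description(role_id))


def dynamic_agent(request: str, choose_tool: Callable[[str, list[str]], tuple[str, str]]) -> str:
    # The model (stubbed by `choose_tool`) picks a tool at run time: a natural fit for MCP
    name, arg = choose_tool(request, list(TOOLS))
    if name not in TOOLS:
        raise ValueError(f"unknown tool: {name}")
    return TOOLS[name](arg)
```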

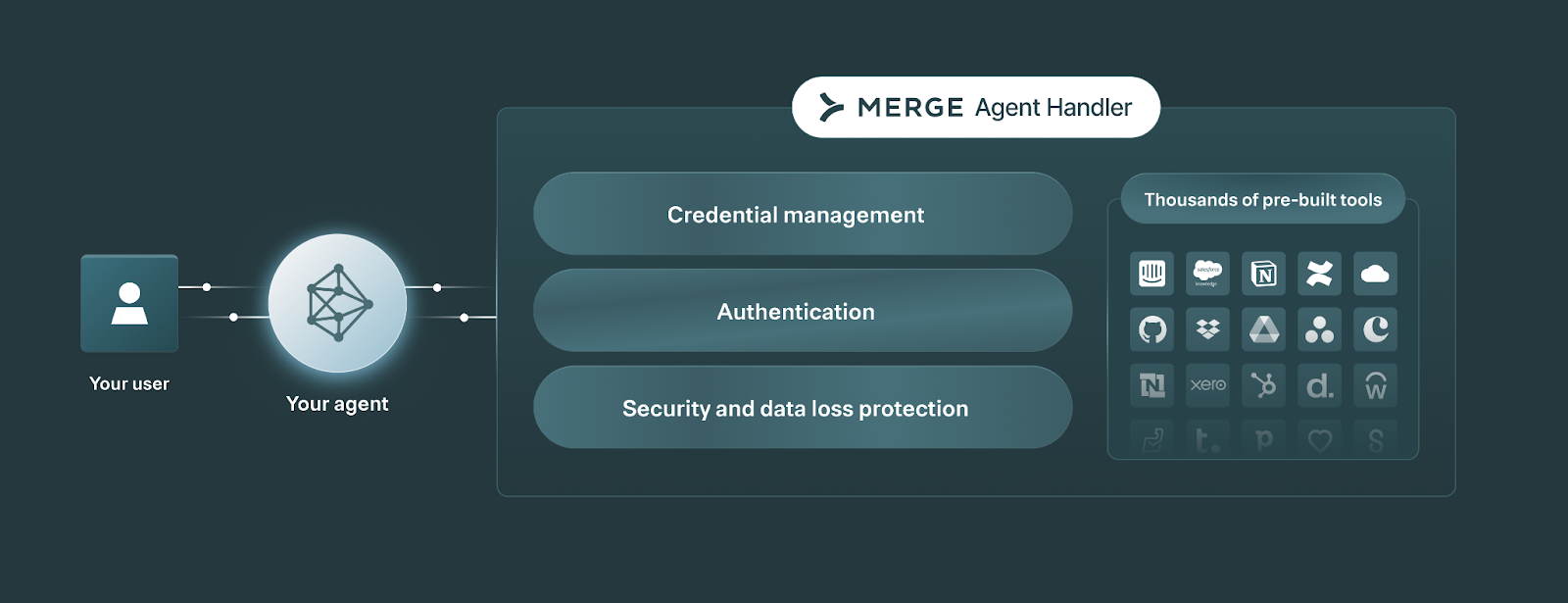

Access data securely via MCP or a unified API through Merge

Merge lets you add hundreds of integrations to your AI products and AI agents through two products: Merge Unified and Merge Agent Handler.

Merge Unified enables you to integrate your product with hundreds of 3rd-party applications through a Unified API. The integrated data is also normalized automatically—enabling your product to support reliable RAG pipelines.

Merge Agent Handler lets you integrate any of your AI agents with thousands of tools, as well as monitor and manage any AI agent.

Learn how Merge can support your product and agentic integration needs by scheduling a demo with an integration expert.
