6 challenges of using the Model Context Protocol (MCP)

.jpg)

MCP has quickly become a widely-adopted protocol for connecting AI agents with 3rd-party applications, but using it effectively can prove difficult.

We’ll break down some of the top issues to look out for based on our own experience in testing numerous MCP servers and building our own.

Incomplete or ambiguous tool descriptions cause incorrect tool calls

Many MCP servers were rushed to market because of internal and external pressures—whether they came from the C-suite, prospects, competitors, and so on.

This has lead many MCP servers to have vague or incomplete tool descriptions.

Your AI agents will, in turn, struggle to determine the best tools to call based on a user’s input. And in many cases, your AI agents will either make the wrong tool call or make additional calls when it suspects that one of them is correct.

This can hurt your business and your customers in a combination of ways:

- Your AI agents may inadvertently expose sensitive information (e.g., personally identifiable information) to unauthorized individuals

- The agents are slowed down—causing the time-sensitive workflows they support to perform poorly

- Your customers may see your AI agents make excessive tool calls—especially if they have to approve each. This can make your customers less motivated to use your agents

- When a workflow breaks, the process of identifying the root cause and addressing it becomes more complex

{{this-blog-only-cta}}

Poor maintenance leads to costly customer issues

MCP servers are often launched for marketing purposes; they help the MCP provider signal to the market that they’re keeping up with—and even on the cutting edge of—AI.

As a result, companies put little thought and resources into improving their MCP servers or fixing their bugs. This not only renders their servers ineffective but also risky to use with customers.

For example, you might use an MCP server that didn’t initially provide the correct schema definitions for sending invoices to clients for its “<code class="blog_inline-code">send_invoice</code>” tool—and the server still hasn’t addressed this.

If you and your customers are unaware of this issue, your customers can end up providing the incorrect parameters (e.g., you don’t include the currency), leading your AI agent to invoice end-users (your customers’ customers) by an inaccurate amount.

Related: Best practices for using an MCP server

Savvy hackers can steal sensitive data

Even if MCP servers have descriptive tools and are maintained properly, your AI agents can still accidentally expose sensitive information from them.

For example, a malicious actor could craft a prompt that manipulates the AI agent into revealing stored secrets, such as a customer’s API keys for several applications.

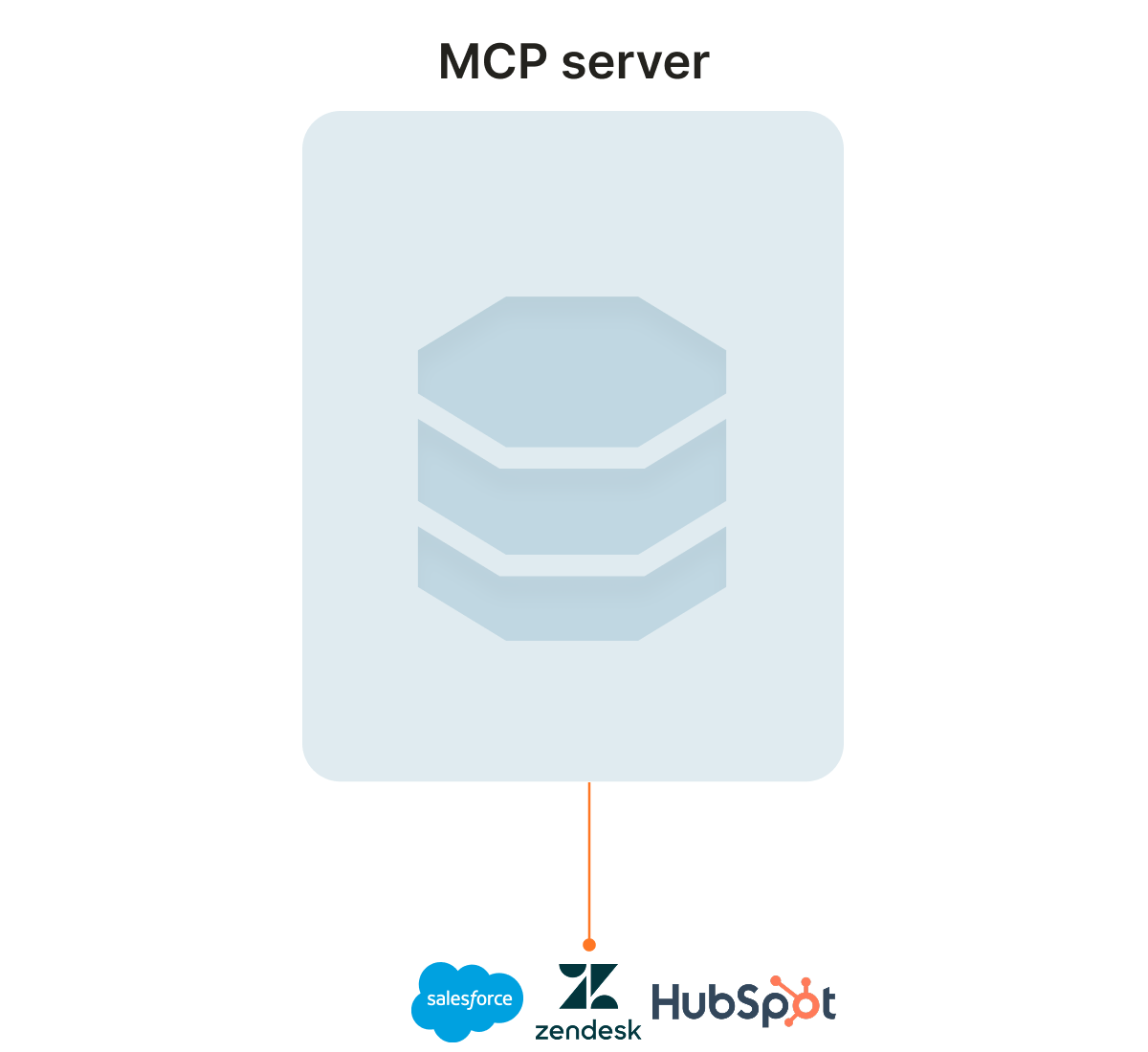

The attacker could then potentially access sensitive data—whether it’s employee records in an HRIS, sales prospects in a CRM, or support tickets in a help desk system.

Another budding security risk is the rise of fraudulent MCP servers, which are effectively MCP servers created by hackers and built for the sole purpose of stealing credentials and leaking sensitive information.

These servers include tricks, like “Tool Poisoning,” which is an emerging attack class where malicious tool metadata is crafted to mislead LLMs into unsafe behavior (e.g., credential requests disguised as legitimate actions).

Given all of the potential security incidents from using MCP servers, it’s little surprise that we’ve seen issues crop up from prominent ones—such as GitHub’s MCP server—and fraudulent servers are springing up in all kinds of industries, such as a weather MCP.

Related: A guide to AI agent observability

Extensive testing is time and resource consuming to carry out

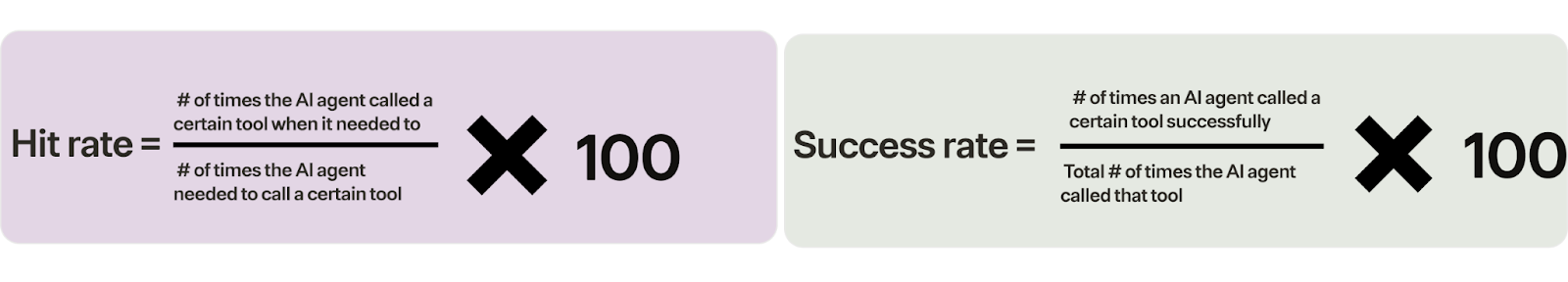

The stakes for using performant MCP servers is high, so you’ll need to perform extensive tests on each to ensure it meets your performance requirements.

Unfortunately, testing MCP servers is complex and time intensive.

It involves collecting sandbox data, documenting different test scenarios, measuring several metrics (as shown below), and analyzing the results.

Multiply this by all of the MCP servers you want to use and the amount of time and effort involved will only increase exponentially.

Poor support for enterprise search use cases

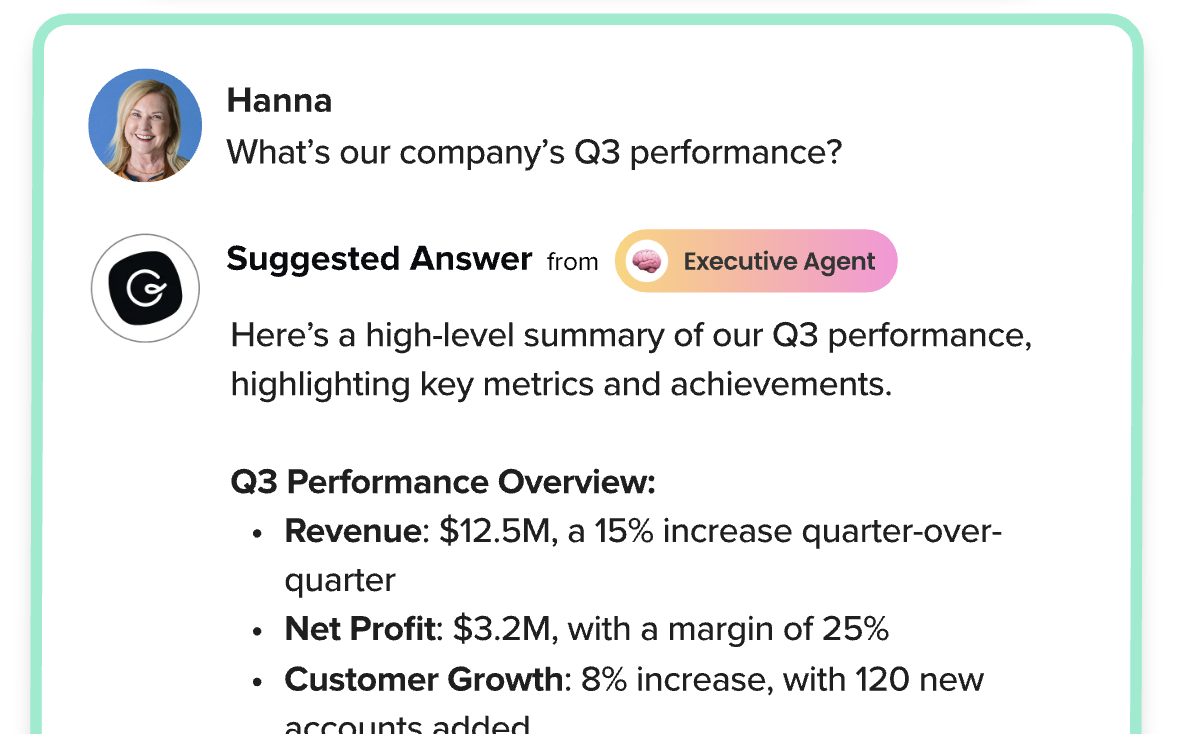

Say you’re building an enterprise search feature or functionality in your product to help users find information from their applications quickly.

In order to power your enterprise search, you’ll need to use semantic search to understand the intent behind a user’s query.

For example, if an executive is asking about their company’s performance last quarter, semantic search would enable your enterprise search product to offer all of the information that can help the executive understand their company’s performance that quarter.

MCP can’t support semantic search, as the protocol relies on the underlying APIs, which don’t support semantic understanding.

APIs only support fuzzy, or exact, string matching, which significantly worsens your enterprise search capabilities. For example, “What’s our company’s Q3 performance?” wouldn’t return anything; instead, the searcher would need to explicitly request the metrics they need (e.g., “What’s our cost of goods sold in Q3 of last year?”).

Excessive tools and descriptions can prevent tool call executions

While many MCP servers offer incomplete tools and/or poorly describe them, other servers provide too many tools and/or use lengthy tool descriptions.

The latter can cause your AI agents to waste compute parsing irrelevant or redundant tool metadata, which can end up timing out your AI agents because the context window gets overloaded and inference slows dramatically.

Even when calls succeed, deeply nested parameters may be overlooked, leading your AI agents to skip potentially relevant tools.

How Merge Agent Handler addresses these challenges

Merge Agent Handler, which provides a single platform to securely connect your AI agents to thousands of tools and monitor and manage any tool call, helps you avoid the challenges associated with using MCP servers by providing:

- Per-identity authentication at call time and least‑privilege boundaries on the connectors and tools an agent can use (via Tool Packs)

- Fully maintained MCP servers for a variety of 3rd-party applications

- Optimal tool names and tool descriptions

- Robust integration observability features (e.g., fully-searchable logs and customizable alerts) to help you detect and address potential security threats

- An Evaluation Suite that lets you test any tool and connector

Start using Merge Agent Handler today by signing up for a free account!

.png)

.png)