
Semantic search: how to implement it for enterprise search


If you’re implementing search functionality in your product, there are generally two methods you can use: semantic search and fuzzy search.

We’ll break down why semantic search is the better option, how companies use it today, and some best practices for implementing it.

But first, let’s align on how each search method works.

Semantic search overview

Semantic search is a type of search functionality that uses the intent behind a user’s query to return nuanced and relevant results.

Here’s how it generally works:

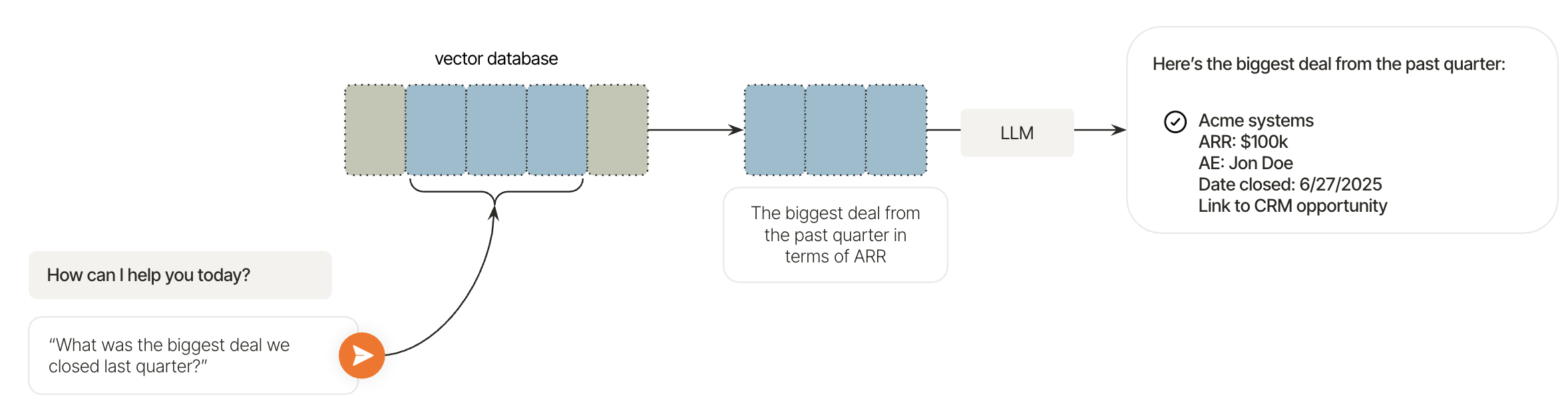

1. A user inputs a query like “what was the biggest deal we closed last quarter?”

2. That query is embedded, or turned into a vector representation.

3. The vector database (VD) retrieves the stored embeddings most similar to the query embedding (e.g., embedded records of closed deals, ARR values, and dates) using a similarity metric such as cosine similarity. In other words, it finds the information most contextually relevant to the user’s query, even if the exact words or phrases don’t match.

4. A large language model (LLM) can take those retrieved results, reason over them, and present an answer in natural language.

For example, based on the results, it can identify the biggest deal and all of the contextual information that would be helpful to the user, like when it was closed, the ARR figure, the rep who closed the deal, and where the deal lives in the CRM.
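The retrieval part of these steps can be sketched in Python. This is a minimal, in-memory illustration under stated assumptions, not a production design: `toy_embed` is a hypothetical placeholder for a real embedding model (it hashes words into a count vector, so it only captures word overlap, not meaning), and `InMemoryVectorIndex` is a hypothetical stand-in for a vector database that scores every stored vector by cosine similarity. The LLM step is left out.

```python
import math
import re
import zlib


def toy_embed(text: str, dims: int = 1024) -> list[float]:
    """Hypothetical placeholder for a real embedding model.

    Hashes each word into a fixed-size count vector, so it reflects
    word overlap only, not meaning.
    """
    vec = [0.0] * dims
    for word in re.findall(r"[a-z0-9]+", text.lower()):
        vec[zlib.crc32(word.encode("utf-8")) % dims] += 1.0
    return vec


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)


class InMemoryVectorIndex:
    """Hypothetical stand-in for a vector database (brute-force search)."""

    def __init__(self, embed=toy_embed):
        self.embed = embed
        self.items: list[tuple[str, str, list[float]]] = []

    def add(self, record_id: str, text: str) -> None:
        # Embed each record once, when it is indexed.
        self.items.append((record_id, text, self.embed(text)))

    def search(self, query: str, k: int = 3) -> list[tuple[float, str, str]]:
        # Step 2: embed the query. Step 3: rank stored vectors by similarity.
        q = self.embed(query)
        scored = [(cosine_similarity(q, vec), rid, text) for rid, text, vec in self.items]
        scored.sort(key=lambda item: item[0], reverse=True)
        return scored[:k]


index = InMemoryVectorIndex()
index.add("deal-1", "Acme deal closed in Q3 with ARR of 120000")
index.add("deal-2", "Globex deal closed in Q3 with ARR of 450000")
index.add("ticket-7", "Printer on floor 2 is out of toner")

for score, record_id, text in index.search("biggest deal closed last quarter", k=2):
    print(f"{score:.2f}  {record_id}: {text}")
```

A real vector database would typically use an approximate nearest-neighbor index instead of scoring every vector, and the top results would then be handed to an LLM as described in step 4.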

Semantic search vs fuzzy search

Fuzzy search, or approximate string matching, returns results based on string similarity.

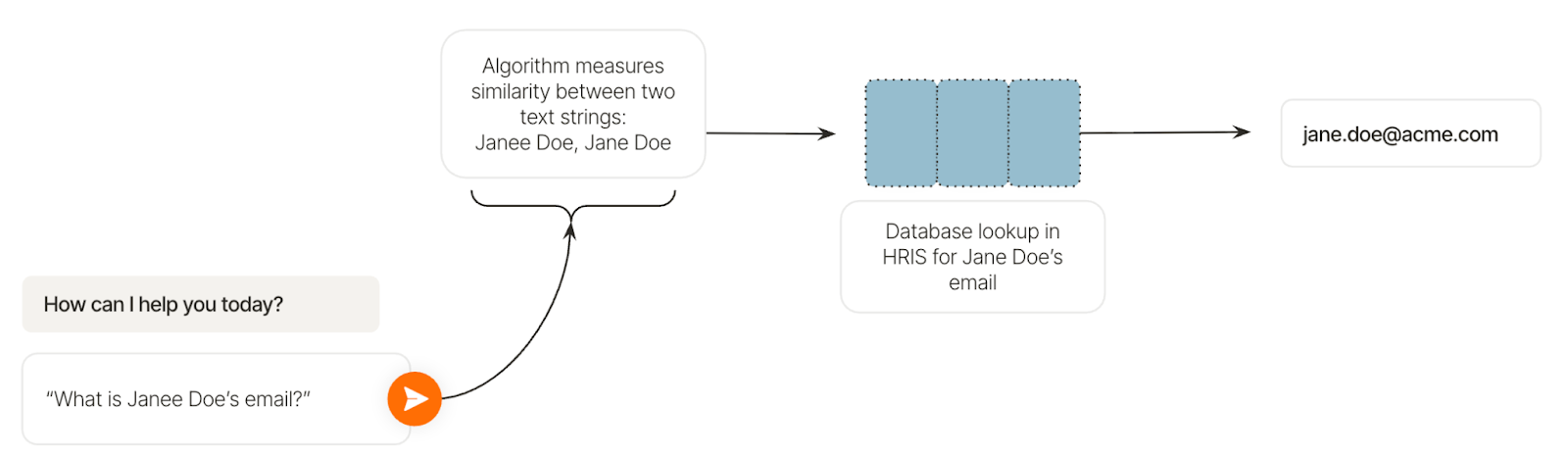

For example, if a user inputs something like “What is Janee Doe’s email?”, an algorithm that measures how similar two text strings are (e.g., Levenshtein distance) can determine that the user meant to input “Jane Doe.”

The system then looks up Jane Doe’s email in an HRIS, finds jane.doe@acme.com, and shares it with the user.
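Here is a minimal sketch of that fuzzy-matching flow, assuming the name has already been extracted from the user’s query. `levenshtein` is the standard dynamic-programming edit distance; `DIRECTORY` and `fuzzy_lookup_email` are hypothetical stand-ins for the HRIS lookup, and the `max_distance` threshold of 2 is an arbitrary choice.

```python
from typing import Optional


def levenshtein(a: str, b: str) -> int:
    """Edit distance: minimum single-character insertions, deletions, or substitutions."""
    if len(a) < len(b):
        a, b = b, a  # keep the shorter string in the inner loop
    previous = list(range(len(b) + 1))
    for i, char_a in enumerate(a, start=1):
        current = [i]
        for j, char_b in enumerate(b, start=1):
            current.append(min(
                previous[j] + 1,                       # delete char_a
                current[j - 1] + 1,                    # insert char_b
                previous[j - 1] + (char_a != char_b),  # substitute (free if equal)
            ))
        previous = current
    return previous[-1]


# Hypothetical HRIS directory: employee name -> work email.
DIRECTORY = {
    "Jane Doe": "jane.doe@acme.com",
    "John Smith": "john.smith@acme.com",
}


def fuzzy_lookup_email(name: str, directory: dict[str, str] = DIRECTORY,
                       max_distance: int = 2) -> Optional[str]:
    """Return the email of the closest-matching name, or None if nothing is close enough."""
    if not directory:
        return None
    query = name.strip().lower()
    best = min(directory, key=lambda known: levenshtein(query, known.lower()))
    if levenshtein(query, best.lower()) > max_distance:
        return None
    return directory[best]


print(fuzzy_lookup_email("Janee Doe"))  # prints jane.doe@acme.com
```

The distance threshold is what keeps fuzzy search "simple": it tolerates a typo or two, but it has no notion of what the query means.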

In short, fuzzy search lets users perform simple queries without having to worry about minor misspellings, while semantic search allows users to perform more complex, insightful, and actionable queries.

Real-world examples of semantic search

Here’s how companies currently use semantic search to power cutting-edge enterprise search experiences.

Guru

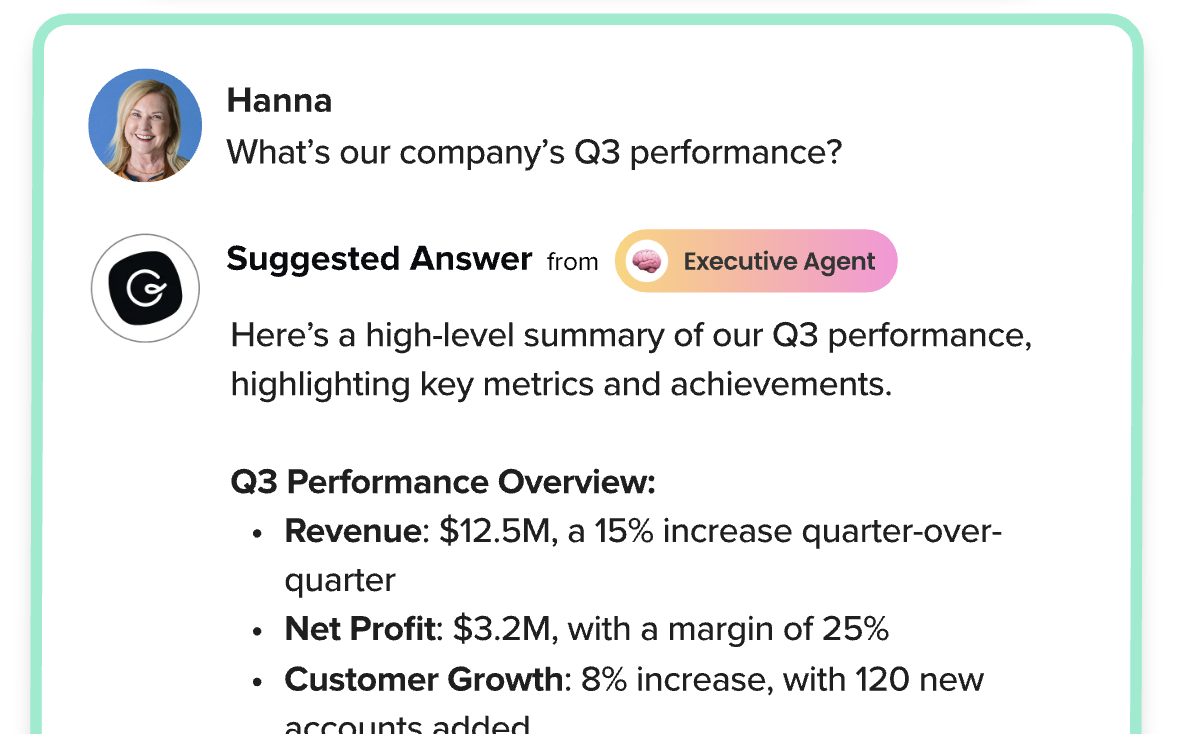

Guru offers an enterprise AI search platform. It lets employees find all kinds of information via semantic search and a vector database that can include embeddings from various data sources, such as file storage apps, ticketing systems, HRISs, and CRMs.

For example, an executive can ask Guru’s enterprise search how the company performed in a certain quarter, and Guru’s platform can use semantic search and an LLM to not only find the key metrics but also present them in a way that’s easy to understand and relevant to the user (in this case, an executive).

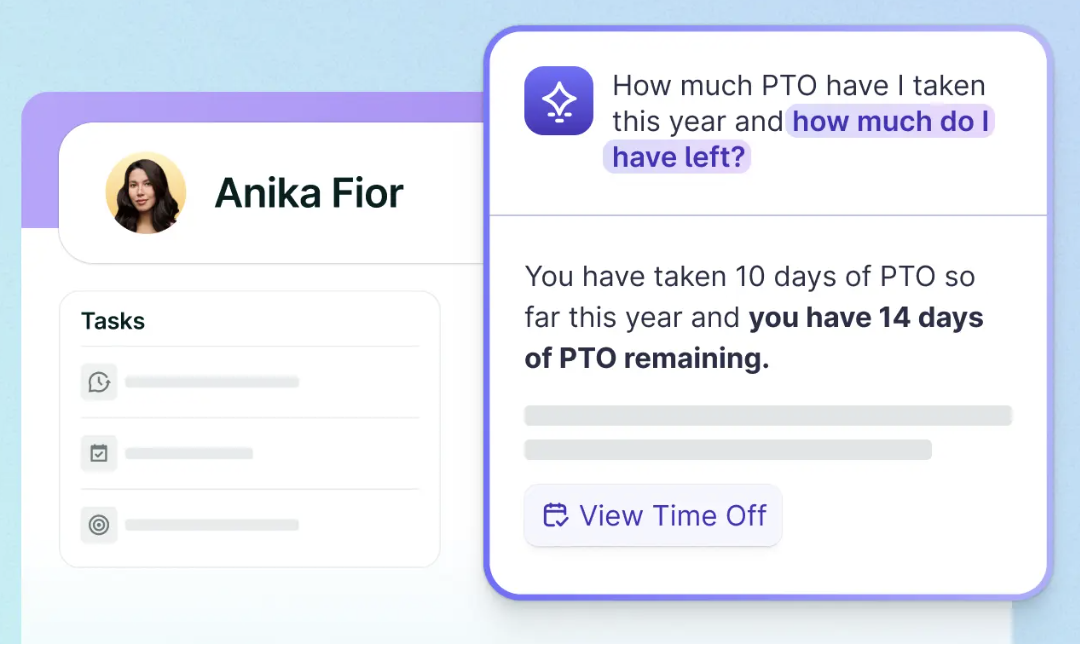

Lattice

Lattice, a leading HR platform, offers Lattice AI—a smart assistant that answers employee questions using both Lattice data and integrated external sources (like employee-related docs in Google Drive or SharePoint).

By leveraging semantic search over embedded corporate knowledge, Lattice AI enables employees to retrieve information—such as how much PTO they’ve used and how much remains—quickly and accurately.

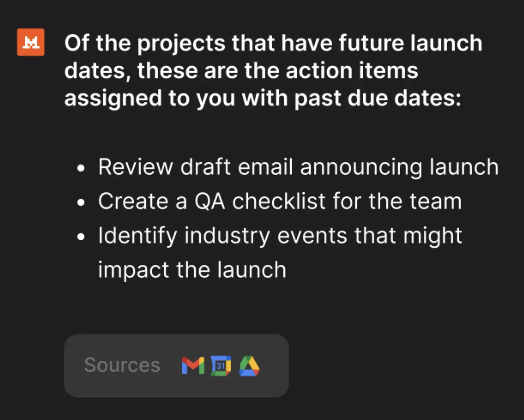

Mistral AI

Mistral AI, a leading LLM provider, offers Le Chat, a conversational assistant with semantic search across embedded documents, web access, and advanced research capabilities. Together, these capabilities help users tackle complex and time-sensitive questions with structured, reference-backed answers.

For instance, a user can ask about their overdue action items, and Le Chat can use its broad data access to list all of them.

Best practices for implementing semantic search with 3rd-party apps

Here are some tips to help you implement semantic search with external data sources successfully.

Perform full data syncs

Performing full data syncs on 3rd-party integrations gives the retrieval system access to the most up-to-date records. In addition, syncing historical data allows your semantic search to capture historical patterns, edge cases, and supporting context, which helps it provide more contextually relevant and reliable insights.

Syncing in advance also gives you the chance to cleanse and normalize the data (e.g., deduplicate records), which leads to more accurate embeddings.
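A minimal sketch of that cleanup step, assuming contact-style records whose field names (`email`, `name`, `company`) are hypothetical and that a normalized email uniquely identifies a person. Each cleaned record is rendered into one consistent text string before it would be sent to an embedding model.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Contact:
    email: str
    name: str
    company: str


def normalize(raw: dict) -> Contact:
    """Trim whitespace and lowercase emails (field names are hypothetical)."""
    def squash(value: str) -> str:
        return " ".join(value.split())  # collapse repeated, leading, and trailing spaces

    return Contact(
        email=raw.get("email", "").strip().lower(),
        name=squash(raw.get("name", "")),
        company=squash(raw.get("company", "")),
    )


def dedupe(raw_records: list[dict]) -> list[Contact]:
    """Keep the first normalized record per email; skip records without one."""
    by_email: dict[str, Contact] = {}
    for raw in raw_records:
        contact = normalize(raw)
        if contact.email:
            by_email.setdefault(contact.email, contact)
    return list(by_email.values())


def to_embedding_text(contact: Contact) -> str:
    # Render every record with the same template before embedding it.
    return f"{contact.name} ({contact.email}) works at {contact.company}"


synced = [
    {"email": " Jane.Doe@acme.com", "name": "Jane  Doe", "company": "Acme"},
    {"email": "jane.doe@acme.com", "name": "Jane Doe", "company": "Acme Inc."},
    {"email": "john.smith@acme.com", "name": "John Smith", "company": "Acme"},
]
texts = [to_embedding_text(c) for c in dedupe(synced)]
print(texts)
```

Keeping the first record per email is the simplest policy; if your source data carries timestamps, keeping the most recently updated record is often a better choice.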

Avoid real-time API queries

Real-time API calls come with several drawbacks that make it difficult to implement semantic search effectively:

- Slow responses: Real-time APIs don’t maintain an index of embeddings on your behalf. This means that every API call would require you to clean, normalize, and embed the entire data set, leading to excessive computational costs and delayed responses

- Handling rate limits: Given the volume of requests you need to make across your user and customer base, managing API providers’ rate limits can quickly become complex and expensive, and can lead to slow response times

- Limited query functionality: APIs typically support only keyword or exact-match filters, which aren’t useful for semantic search

- Lack of control: API providers can experience outages, bugs, and a host of other issues that can cause your API calls to fail or get delayed—and there’s often nothing you can do to prevent it

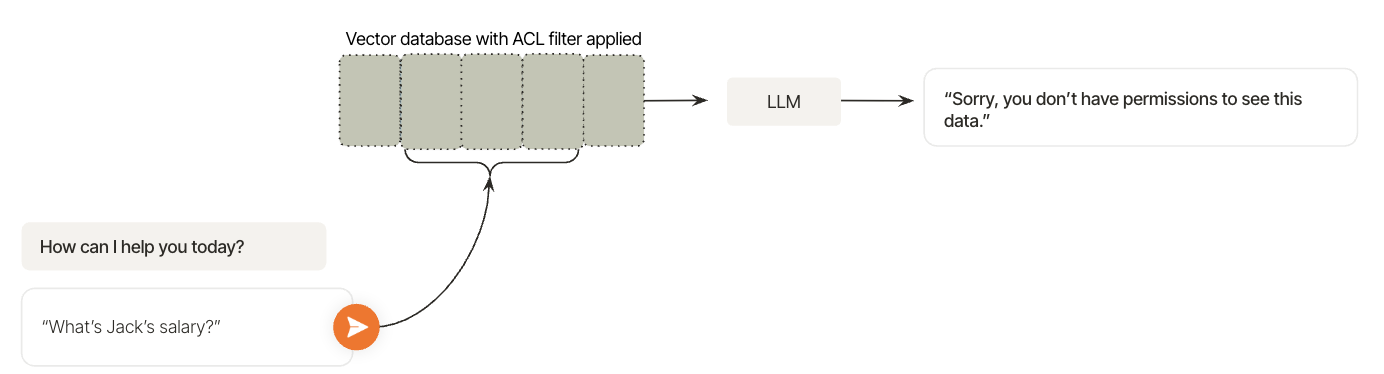

Adopt access control lists (ACLs)

ACLs let you show data only to the individuals who can access it in the underlying system(s). For example, if a user asks your enterprise search about a colleague’s salary but doesn’t have access to that data in the integrated HRIS, an ACL filter prevents them from retrieving the figure.

Within the context of semantic search, ACL filters can be applied while the vector database searches for semantically similar embeddings, so that only authorized results (or no results at all) get shared with your LLM.
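The sketch below shows one way to apply an ACL filter at retrieval time. It assumes each embedded chunk carries the groups allowed to see it, copied from the source system’s permissions; `Chunk`, `allowed_groups`, and `acl_filtered_search` are hypothetical names, and the two-dimensional vectors are hand-written stand-ins for real embeddings.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Chunk:
    chunk_id: str
    text: str
    vector: tuple[float, ...]
    allowed_groups: frozenset[str]  # assumed to mirror the source system's permissions


def cosine_similarity(a, b) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    norms = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norms if norms else 0.0


def acl_filtered_search(query_vector, chunks, user_groups: set[str], k: int = 3) -> list[Chunk]:
    # Filter first, so chunks the user can't access are never scored or returned.
    visible = [c for c in chunks if c.allowed_groups & user_groups]
    visible.sort(key=lambda c: cosine_similarity(query_vector, c.vector), reverse=True)
    return visible[:k]


chunks = [
    Chunk("salary-jane", "Jane Doe's base salary record", (0.9, 0.1), frozenset({"hr-admins"})),
    Chunk("pto-policy", "Company PTO policy", (0.7, 0.3), frozenset({"all-employees"})),
]
query_vector = (1.0, 0.0)  # stand-in for the embedded query "What is Jane's salary?"

employee_results = acl_filtered_search(query_vector, chunks, {"all-employees"})
print([c.chunk_id for c in employee_results])  # prints ['pto-policy']
```

Filtering before ranking means unauthorized chunks never reach the LLM; if nothing passes the filter, the LLM receives an empty result set. Many vector databases offer metadata filtering that can serve the same purpose inside the query itself.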

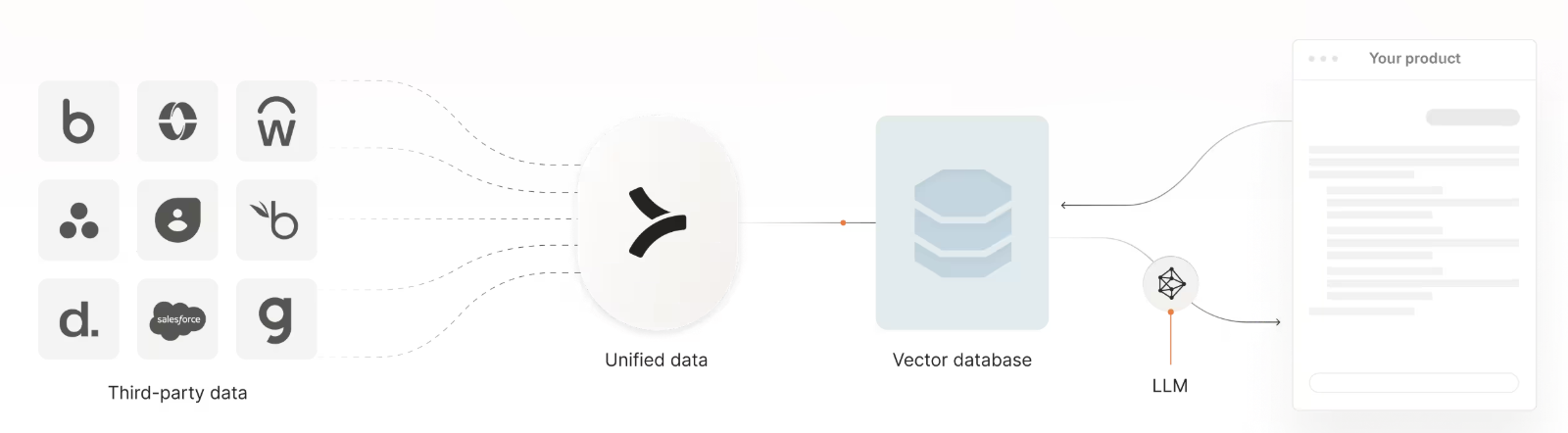

Leverage a unified API platform

A unified API platform, which offers a single API to access a whole category of integrations, lets you quickly add all the integrations you need.

The platform also normalizes all of the integrated data according to its predefined data models, ensuring you can embed it accurately before adding it to your vector database.
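To make normalization concrete, here is a hypothetical example: two HRIS providers return the same kind of employee data in different shapes, and each is mapped into one common `Employee` model before embedding. The payload field names, the `Employee` model, and the 8-hour workday conversion are all assumptions for illustration, not any particular platform’s data model.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Employee:
    """Hypothetical common data model shared by every HRIS integration."""
    full_name: str
    work_email: str
    pto_balance_hours: float


def from_provider_a(payload: dict) -> Employee:
    # Hypothetical provider A: split name fields, PTO reported in hours.
    return Employee(
        full_name=f"{payload['first_name']} {payload['last_name']}",
        work_email=payload["email"].strip().lower(),
        pto_balance_hours=float(payload["pto_hours_remaining"]),
    )


def from_provider_b(payload: dict) -> Employee:
    # Hypothetical provider B: nested time-off object, PTO reported in days.
    return Employee(
        full_name=payload["displayName"],
        work_email=payload["workEmail"].strip().lower(),
        pto_balance_hours=float(payload["timeOff"]["balanceDays"]) * 8,  # assumes 8-hour days
    )


def to_embedding_text(employee: Employee) -> str:
    # Data from every provider now renders the same way before it is embedded.
    return (f"{employee.full_name} <{employee.work_email}> has "
            f"{employee.pto_balance_hours:g} hours of PTO remaining")


a = from_provider_a({"first_name": "Jane", "last_name": "Doe",
                     "email": "Jane.Doe@acme.com", "pto_hours_remaining": "16"})
b = from_provider_b({"displayName": "John Smith", "workEmail": "john.smith@acme.com",
                     "timeOff": {"balanceDays": 2.5}})
print(to_embedding_text(a))
print(to_embedding_text(b))
```

Because both records end up in the same shape and units, their embeddings can be compared directly in one vector database.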


Semantic search FAQ

In case you have more questions about semantic search, here are answers to some commonly asked ones.

What are the benefits of semantic search?

Semantic search’s benefits vary based on its implementation and the type of enterprise search experience you offer. That said, its benefits typically include:

- Comprehensive query support: It can answer a wide range of questions, so long as the vector database includes high-quality and relevant embeddings

- Product differentiation: It can help you stand out from rivals (assuming they don’t also offer semantic search). This, in turn, can help you close more deals and retain more customers

- Support for agentic workflows: By pairing semantic search with your AI agent(s), you can implement a wide range of agentic use cases.

For example, if a user asks how much PTO they have left, your enterprise search can not only provide the answer but also display a “Request PTO” button. If the user clicks it, the button triggers one of your AI agents to kick off a PTO request flow on the user’s behalf.

How does semantic search compare to retrieval-augmented generation (RAG)?

RAG and semantic search both use embeddings to find semantically similar results in a vector database.

The key difference is what happens next: In RAG, the retrieved results are passed into an LLM, which combines them with the user’s query to generate a new answer. Semantic search, by contrast, can stop at retrieval. In other words, it can surface the most relevant results without involving an LLM.
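The difference can be sketched as two functions that share the same retrieval step. `retrieve` and `generate` are hypothetical callables you would back with your vector database and LLM of choice; `fake_retrieve` and `fake_generate` are stubs for illustration, and the prompt template is likewise only an example.

```python
from typing import Callable

Retriever = Callable[[str, int], list[str]]  # hypothetical: (query, k) -> passages
Generator = Callable[[str], str]             # hypothetical: prompt -> LLM completion


def semantic_search(query: str, retrieve: Retriever, k: int = 3) -> list[str]:
    # Semantic search can stop here and show the ranked results directly.
    return retrieve(query, k)


def build_rag_prompt(query: str, passages: list[str]) -> str:
    # Number each retrieved passage so the model can refer to it.
    context = "\n".join(f"[{i}] {p}" for i, p in enumerate(passages, start=1))
    return (
        "Answer the question using only the context below.\n"
        f"Context:\n{context}\n\n"
        f"Question: {query}\nAnswer:"
    )


def rag_answer(query: str, retrieve: Retriever, generate: Generator, k: int = 3) -> str:
    # RAG: same retrieval step, then the passages and the query go to an LLM.
    passages = semantic_search(query, retrieve, k)
    return generate(build_rag_prompt(query, passages))


def fake_retrieve(query: str, k: int) -> list[str]:
    # Stub for a vector database query; ignores the query text.
    return ["Globex deal closed in Q3, ARR 450000",
            "Acme deal closed in Q3, ARR 120000"][:k]


def fake_generate(prompt: str) -> str:
    # Stub for an LLM call; echoes the first context line instead of reasoning.
    return "(stub) " + prompt.splitlines()[2]


question = "What was the biggest deal we closed last quarter?"
print(semantic_search(question, fake_retrieve, k=2))
print(rag_answer(question, fake_retrieve, fake_generate, k=2))
```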

How does semantic search compare to vector search?

Semantic search uses vector search to find the vectors in the VD that are closest to the query vector. But semantic search also includes other steps, such as embedding the query and returning the results.
