5 ways to secure AI agents successfully in 2026

A single security incident involving your AI agents can erode customer trust, damage your reputation in the market, and put you at risk of violating compliance regulations.

Fortunately, there are several measures you can take to secure every AI agent you build and support.

We’ll cover the top ones. But first, let's explore why you need to secure AI agents in the first place.

Benefits of securing AI agents

Here are just a few reasons.

Prevents sensitive information from leaking

If you’re building AI agents to power critical workflows, they’ll likely require access to sensitive data—from employees’ social security numbers to customers’ billing information.

AI agents can make autonomous decisions that risk leaking this data. Combine this with threats like prompt injections, and this risk only grows.

Fortunately, you can use an AI agent observability solution to establish rules that govern how, exactly, these AI agents share data. And you can set up alerts for any rule violations.

Together, these rules and alerts help you avoid sharing sensitive data with unauthorized third parties. And in the rare case that a leak happens, your team can learn about the issue quickly and work to resolve it.

Mitigates the risks of AI agent sprawl

As your team builds more AI agents, it can be hard to keep track of the ones that are live in production, the systems they have access to, and the data they can receive and share.

As a result, some AI agents may share sensitive information with unauthorized individuals, and without a way to observe your AI agents at both global and granular levels, your team may not learn about these issues in time.

To address this head on, you can leverage AI agent observability tooling that lets you analyze all of your AI agents across the organization. And you can build workflows on top of rule violations so that any time an AI agent violates a certain rule, the appropriate workflow gets triggered.

Gives you a competitive advantage

Many of your competitors likely aren’t doing enough to secure their AI agents.

If you take rigorous security measures and can demonstrate them in a demo or a proof of concept, you’ll stand out and be more likely to win over prospects.

How to secure your AI agents

You can secure your AI agents by implementing the following measures yourself or by using a third-party solution.

Establish rules on the data AI agents can receive and share

To prevent your AI agents from making tool calls that fall outside of their scope, you can set up explicit rules that serve as guardrails.

You can apply these rules to all of your agents, to specific groups, or to individual agents. And you can assign precise actions, such as blocking, notifying, or redacting, to control how the AI agents interact with specific types of data.

Here are just some examples of how these rules can look:

- Credit card numbers: All of your AI agents are blocked from receiving and sharing these numbers

- Email addresses: A specific group of employees at your company gets notified when an AI agent shares or receives an email address

- Social security numbers: All of your AI agents receive redacted social security numbers (e.g., last 4 digits) and can only send redacted social security numbers to third-party systems
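As a rough illustration, the three example rules above could be expressed as pattern-plus-action pairs. This is a minimal Python sketch, not any particular product's API: the `Rule`, `Outcome`, and `apply_rules` names, the action strings, and the regular expressions are all assumptions made for the example, and the patterns are far too loose for production detection.

```python
import re
from dataclasses import dataclass, field


@dataclass
class Rule:
    name: str
    pattern: re.Pattern
    action: str  # one of "block", "notify", "redact" (hypothetical action names)
    replacement: str = ""  # only used by the "redact" action


@dataclass
class Outcome:
    allowed: bool
    text: str
    notifications: list = field(default_factory=list)


# Illustrative patterns only; real detectors need validation (e.g. Luhn checks).
RULES = [
    Rule("credit_card", re.compile(r"\b(?:\d[ -]?){13,16}\b"), "block"),
    Rule("email_address", re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+"), "notify"),
    Rule("ssn", re.compile(r"\b\d{3}-\d{2}-(\d{4})\b"), "redact", r"***-**-\1"),
]


def apply_rules(text: str, rules=RULES) -> Outcome:
    """Check a payload an agent is about to receive or send against each rule."""
    notifications = []
    for rule in rules:
        if not rule.pattern.search(text):
            continue
        if rule.action == "block":
            # Stop immediately: the payload must not pass through at all.
            return Outcome(False, "", notifications + [rule.name])
        if rule.action == "notify":
            # Let the payload through, but record who needs to be told.
            notifications.append(rule.name)
        elif rule.action == "redact":
            # Keep only the captured last four digits.
            text = rule.pattern.sub(rule.replacement, text)
    return Outcome(True, text, notifications)
```

Scoping rules to all agents, a group, or a single agent could be layered on top by passing a different `rules` list per agent.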

Build alerts to get notified of any rule violations

Even with a comprehensive set of rules in place, AI agents can still violate them.

That’s why your team needs real-time visibility into incidents and the surrounding context.

This means tracking which rules were violated, which agents were responsible, when the violations occurred, and additional relevant metadata.

The tooling you use should also allow you to filter violations by tool calls, specific agents, rule categories, and more—helping your team identify trends and address broader security risks.

It should also support alerting workflows by integrating with tools like Slack, PagerDuty, and email service providers to notify the right people immediately when a rule is broken.
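To make the idea concrete, here is a small Python sketch of a violation record, a filter, and a router that fans violations out to notifiers. Everything in it is hypothetical: the field names, the `AlertRouter` class, and the notifier callables stand in for whatever Slack, PagerDuty, or email integration you actually use, and no real integration API is called.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import Callable, Optional


@dataclass
class Violation:
    rule: str
    category: str
    agent: str
    tool_call: str
    occurred_at: datetime
    metadata: dict = field(default_factory=dict)


def filter_violations(violations, *, agent: Optional[str] = None,
                      category: Optional[str] = None,
                      tool_call: Optional[str] = None):
    """Return the violations that match every filter that was supplied."""
    return [
        v for v in violations
        if (agent is None or v.agent == agent)
        and (category is None or v.category == category)
        and (tool_call is None or v.tool_call == tool_call)
    ]


class AlertRouter:
    """Maps a rule category to the notifier callables subscribed to it."""

    def __init__(self):
        self._routes = {}

    def subscribe(self, category: str, notifier: Callable[[Violation], None]):
        self._routes.setdefault(category, []).append(notifier)

    def dispatch(self, violation: Violation) -> int:
        """Call every notifier for the violation's category; return the count."""
        notifiers = self._routes.get(violation.category, [])
        for notify in notifiers:
            notify(violation)
        return len(notifiers)
```

A notifier here is any function that accepts a `Violation`, so a function that posts to a chat channel or pages an on-call engineer could be subscribed the same way.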

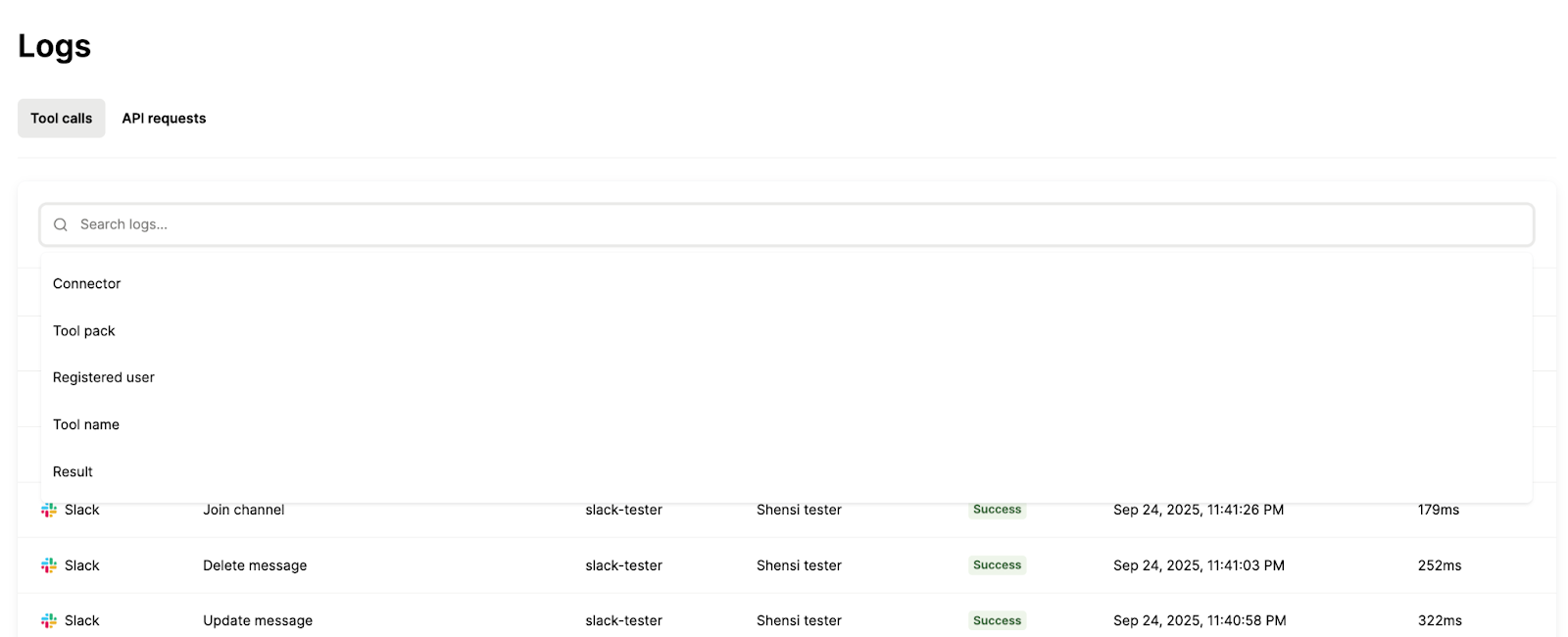

Use logs to track and troubleshoot individual tool calls

To understand why a certain tool call failed, and to get more context on a specific security threat, you can use logs that record which tool call was made, when it was made, which user invoked it, the tool arguments that were used, what was returned, the status (success or failure), and more.

You should also incorporate filters so that you can find individual logs quickly. These filters can include rule violations, users, tool names, dates, and so on.
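The log fields and filters described above might look something like the following Python sketch. The `ToolCallLog` shape and the `call_and_log` and `find_logs` helpers are assumptions for illustration; an in-memory list stands in for a real log store.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import Any, Callable


@dataclass
class ToolCallLog:
    tool_name: str
    user: str
    arguments: dict
    result: Any
    status: str  # "success" or "failure"
    called_at: datetime
    rule_violations: list = field(default_factory=list)


def call_and_log(logs: list, tool_name: str, user: str,
                 tool: Callable[..., Any], arguments: dict) -> ToolCallLog:
    """Run a tool and append a log entry whether it succeeds or raises."""
    called_at = datetime.now(timezone.utc)
    try:
        result, status = tool(**arguments), "success"
    except Exception as exc:
        # Keep the error text so the failure can be troubleshot later.
        result, status = repr(exc), "failure"
    entry = ToolCallLog(tool_name, user, dict(arguments), result, status, called_at)
    logs.append(entry)
    return entry


def find_logs(logs, *, user=None, tool_name=None, status=None, since=None):
    """Return log entries matching every filter that was supplied."""
    return [
        e for e in logs
        if (user is None or e.user == user)
        and (tool_name is None or e.tool_name == tool_name)
        and (status is None or e.status == status)
        and (since is None or e.called_at >= since)
    ]
```

Filtering by rule violation could be added in the same way by checking the `rule_violations` field.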

Require authentication before users can invoke AI agents

To confirm users’ identities and ensure they have permission to access and act on specific data, you should require them to authenticate through your AI agent first.

For example, if a user wants to create tickets in Jira via your AI agent, prompt them to authenticate with that Jira instance. The agent will only complete the task once authentication is successful and the user is authorized to create tickets.
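The Jira example can be sketched as a pair of checks that run before the tool does. This is a simplified Python illustration under stated assumptions: the `Connection` record, the permission string, and the `create_ticket` function are hypothetical, and the function returns a stand-in dictionary instead of calling the real Jira API.

```python
from dataclasses import dataclass


class AuthenticationRequired(Exception):
    """The user has not connected their account for this system yet."""


class NotAuthorized(Exception):
    """The user is authenticated but lacks permission for this action."""


@dataclass(frozen=True)
class Connection:
    user: str
    system: str
    permissions: frozenset


def create_ticket(connections: dict, user: str, title: str) -> dict:
    """Create a ticket only for an authenticated, authorized user."""
    conn = connections.get((user, "jira"))
    if conn is None:
        # The agent should prompt the user to authenticate at this point.
        raise AuthenticationRequired(f"{user} must authenticate with Jira first")
    if "create_ticket" not in conn.permissions:
        raise NotAuthorized(f"{user} may not create tickets")
    # Stand-in for the real API call, which is out of scope for this sketch.
    return {"system": "jira", "title": title, "created_by": user}
```

Raising distinct exceptions for the two cases lets the agent respond differently: prompt for login in one, and explain the missing permission in the other.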

Leverage an audit trail to detect and address risks caused internally

In rare cases, someone on your team can either inadvertently or intentionally modify your AI agents such that they become vulnerable to security and compliance issues. For example, a user can accidentally delete a rule that blocked your AI agents from sharing credit card information.

To pinpoint these incidents in time and address them, you can use an audit trail that shows the changes made across your AI agents over time. Each entry can include who on your team made the change, when it happened, and a description of what they did.

And as with tool call and API request logs, you can add filters to find individual audit entries quickly, whether that’s by user or event type.
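An audit trail along these lines can be sketched in Python as an append-only list of events with filters on top. The `AuditEvent` fields, the `AuditTrail` class, and the event type names such as `rule.deleted` are assumptions chosen for the example, not a specific tool's schema.

```python
from dataclasses import dataclass
from datetime import datetime, timezone


@dataclass(frozen=True)
class AuditEvent:
    actor: str
    event_type: str  # e.g. "rule.deleted" (hypothetical naming scheme)
    description: str
    occurred_at: datetime


class AuditTrail:
    """Append-only record of configuration changes made to AI agents."""

    def __init__(self):
        self._events = []

    def record(self, actor: str, event_type: str, description: str) -> AuditEvent:
        event = AuditEvent(actor, event_type, description,
                           datetime.now(timezone.utc))
        self._events.append(event)
        return event

    def find(self, *, actor=None, event_type=None):
        """Return events matching every filter that was supplied."""
        return [
            e for e in self._events
            if (actor is None or e.actor == actor)
            and (event_type is None or e.event_type == event_type)
        ]
```

With this shape, the accidental deletion described earlier would surface through a query such as `trail.find(event_type="rule.deleted")`.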
