AI agent governance: key aspects, benefits, and platforms

.png)

You likely need your AI agents to make on-the-fly-decisions, like creating a support ticket on a rep’s behalf, updating the executive team on a product improvement, or enriching and routing leads to the right reps.

These agentic workflows, however, can lead to unintended and undesirable outcomes, such as sending sensitive information to the wrong individuals or inputting data into the wrong fields.

To help you reap the benefits of using AI agents and minimize their security risks, you can govern them.

We’ll walk through what this entails and the tooling you’ll need, but let’s first align on how AI agent governance works.

What is AI agent governance?

It’s a set of policies, controls, and processes that ensure AI agents operate safely, ethically, and compliantly across their lifecycle.

This involves using a platform that can authenticate tool calls, support enterprise-grade tools, and provide visibility on any tools that are invoked.

.png)

{{this-blog-only-cta}}

Key components of governing AI agents

Governing AI agents effectively requires you to adopt measures before and after an agent is pushed to production. Here are just a few to keep in mind:

- Enforcing authentication: Your AI agents should, by default, ask users to authenticate with the required systems before performing actions on any of the underlying data. And only once the user authenticates successfully, the agent would go on to make the necessary tool call(s)

- Testing tools rigorously: You should take several measures to fully battle-test your agents' tool calls, such as running representative, real‑world workflows; adopting different identities and scopes and seeing if least-privilege boundaries are enforced; and measuring certain test metrics, like success rate and latency to benchmark performance

- Leveraging audit trails in your agentic tooling: This gives you person-level accountability and granular visibility into every action made on your agents’ tools, allowing you to enforce policies, investigate issues, and prove compliance

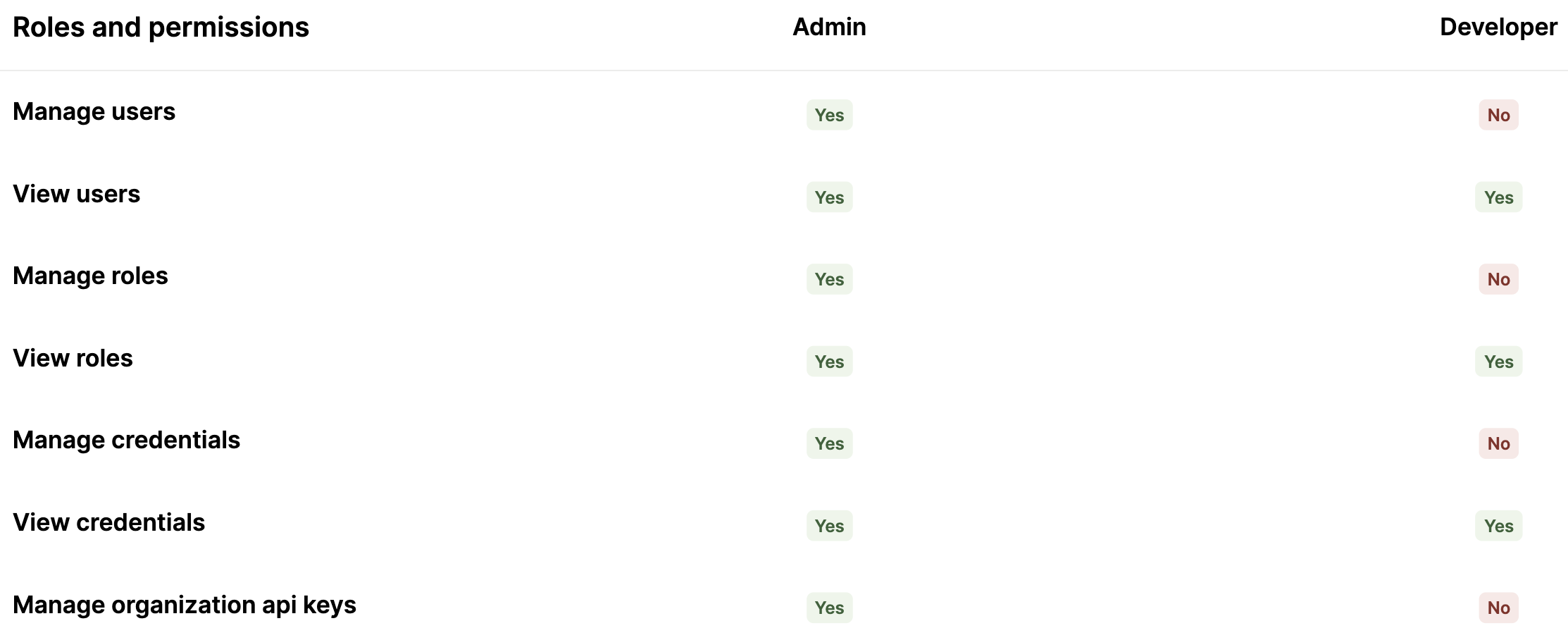

- Using role-based access controls: Create and assign users with specific roles (e.g., read-only) to prevent them from adding and/or modifying certain connectors, tools, user access, and more

- Accessing logs: These logs should be fully-searchable and include details like when a tool was invoked, how long the call took end-to-end, the user who invoked it, the arguments that got passed, the actions that were taken, etc.

- Establishing rules: Assign rules that dictate how specific agents and users interact with certain types of data. For example, an agent can share specific kinds of information (e.g., email addresses), but those actions need to be logged somewhere

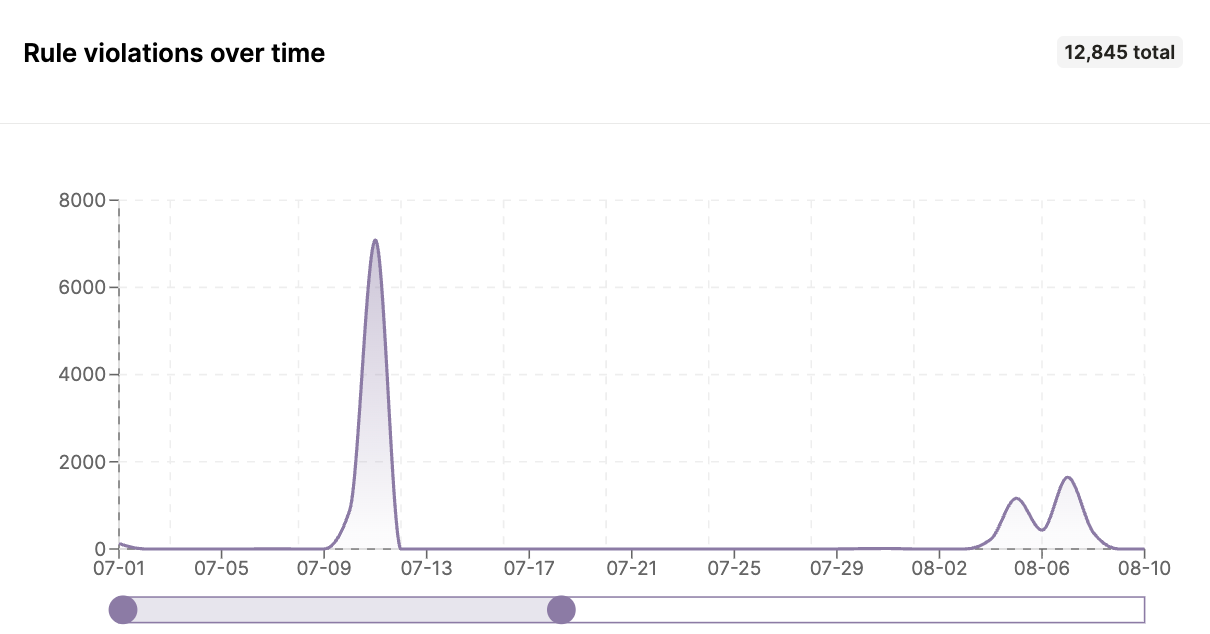

- Implement rule violation-based alerts: If and when rules are violated, your team can receive an alert and important context on what transpired, such as when the violation occurred, the data that was involved, the tool that was used, and so on

Related: How to manage AI agents

Why AI agent governance is important

It comes down to several factors:

Prevents harmful data leaks

AI agents make decisions on-the-fly based on a long-tail of unexpected inputs. As a result, their decisions may not always be what you’d expect or desirable.

Effective AI agent governance ensures that even if AI agents generate incorrect decisions, they can’t go on to take the associated actions, such as sharing sensitive credentials or writing records to the wrong system.

Enables you to comply with data privacy and security requirements

This comes down to a number of reasons that, taken together, allow your AI agents to follow the principle of data minimization, handle sensitive data appropriately, and provide accountability and traceability.

More specifically:

- Users can’t access additional data via governed agents. Access is strictly tied to authenticated user permissions

- Agents can be blocked from sharing and receiving disallowed data, and sensitive fields (like PII) can be automatically redacted or filtered

- Logs and audit trails can support compliance reporting and help your team navigate any security incidents

- Rules ensure agents can’t take unsafe or noncompliant actions

Helps you foster user trust

Most users aren’t accustomed to using AI agents in their day-to-day workflows. As a result, if they experience or hear about performance or security incidents from using your agents, they can quickly decide to avoid them and continue working in ways they’re comfortable with.

Governing AI agents effectively doesn’t guarantee that they’ll be reliable and performant, but it significantly increases the chances that they are.

Related: Why observing AI agents is important

How to evaluate AI agent governance platforms

Aside from evaluating the platforms’ core governance functionality, it’s worth looking for the following:

- Stable and reputable companies: Many AI agent management companies are just launching and have little funding. Since you’re likely looking to support AI agents long-term, the tooling you use to govern them should also have a solid financial foundation

- Existing customer base: If leading AI and SaaS companies have adopted a solution, it’s a strong signal that the solution is the best—if not one of the best—offering in the market. For example, Perplexity, the AI-powered answer engine, leverages Merge Agent Handler

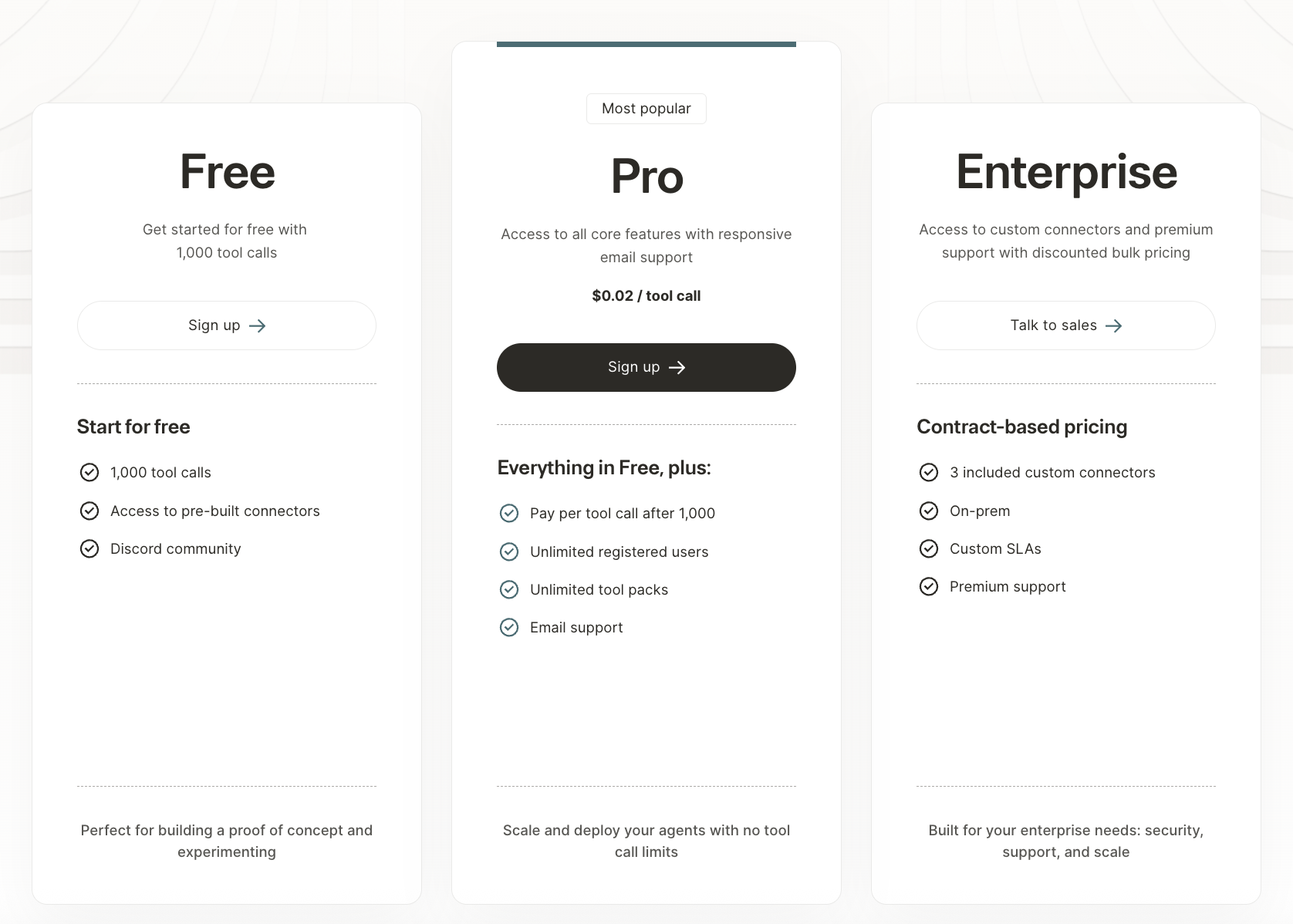

- Freemium pricing: Certain agent governance platforms, like Merge Agent Handler, enable you to sign up for free to evaluate their tools, testing capabilities, logging functionality, and more

Given how new this software category is, it’s worth taking advantage of these plans and validating these nascent solutions.

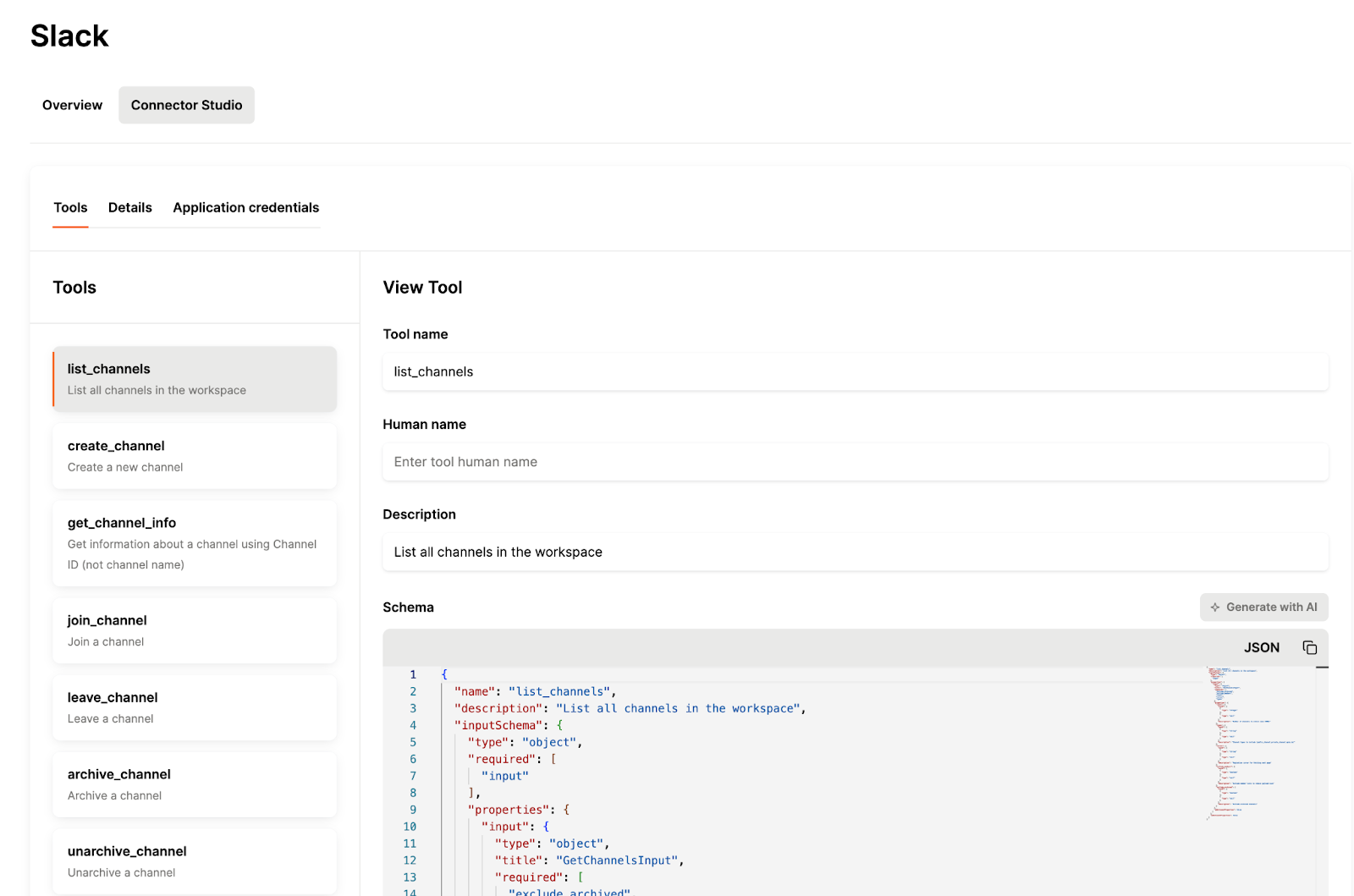

- Flexible connectors and tools: Your agentic use cases will likely evolve in unexpected ways. With this in mind, you should prioritize agent governance platforms that let you create or modify existing connectors and tools—while still respecting your governance policies and processes

Related: A guide to AI agent observability platforms

AI agent governance FAQ

In case you have any more questions on governing AI agents, we’ve answered several popular questions below.

What are the challenges of governing AI agents?

There are several you might experience:

- Hallucinations can lead to unauthorized actions. Even with the proper governance in place, your agents can misinterpret inputs and use them to perform potentially harmful actions, like sharing Social Security numbers with unauthorized individuals

- Agents can be difficult to govern at scale. As you offer more agents, the process of implementing and maintaining governance rules for each can be time intensive and technically complex

- Building workflows around rule violations can prove difficult. While you can use a 3rd-party solution to observe your agents and see when they violate a rule, diagnosing and addressing underlying issues that drive agents’ behaviors is often significantly more complex. It requires your team to trace the agent’s decision-making process, interpret model outputs, analyze contextual factors (e.g., prompt structure), and more

- AI agents’ non-deterministic nature makes it difficult to define governance rules in advance. You can attempt to predict an agent’s security and performance issues, but there’ll inevitably be edge-case behaviors that are impossible to forecast and that can compromise your business and your customers

What are some examples of governing AI agents?

Here are just a few:

- Product updates for executives: If an agent is tasked with sharing product updates with executives in Slack, you can set governance rules that prevent it from sharing those updates in other channels, using personally-identifiable information (PII) in the updates, and more

- Flagging product bugs: If an agent needs to create tickets in an app like Linear when product bugs get diagnosed, you can set governance rules to ensure the agent only shares the tickets in Linear, assigns them to someone in product, and avoids adding sensitive customer information in the tickets’ descriptions

- Prospect communications: If your product uses an AI agent to chat with prospects on your site, you can set governance rules that limit its responses to verified product information and prevent it from sharing internal documentation or roadmap details

What ethical consideration is most important when governing AI agents?

The most important consideration is how your AI agents can potentially harm your employees, customers, and prospects. To that end, you should prioritize governance rules that minimize the amount of data your agents share on individuals and limit their access to sensitive systems and PII.

How can MCP tools remain secure when AI agents have autonomous access to them?

This comes down to effective MCP governance of MCP tools.

You should:

- Enforce authentication mechanisms across tools, like OAuth 2.0, so that unauthorized users can’t use your agents to access and use sensitive data

- Use role-based access controls and audit trails to prevent colleagues from compromising your agents’ security (e.g., making OAuth 2.0 optional for a given connector)

- Set up alerts for scenarios that hint at security threats, such as repeated authentication failures or when a tool starts receiving a high volume of requests

How can I test AI agent behavior in a controlled environment before production deployment?

You can use third-party tools, like Merge Agent Handler, to generate realistic test data and simulate user prompts. From these prompts, you can assess whether your agents invoke the correct tools, pass the appropriate arguments, execute tool calls efficiently, trigger the right error-handling flows for specific failures, and more.

You should also perform these tests across combinations of your supported connectors, the large language models (LLMs) your agents can run on, the different user roles available, among other variables, to fully pressure-test your agents’ behavior before pushing them to production.

What governance frameworks should I establish for AI agents accessing multiple integrated systems?

You should begin by defining clear access policies that specify which agents can interact with which MCP servers and tools.

On top of this, implement approval workflows for granting new tool permissions, conduct regular access reviews (e.g., every quarter), and establish incident response procedures for situations in which agents behave unexpectedly or produce risky outputs.

Finally, document your governance framework thoroughly and ensure all relevant stakeholders—from engineering to security—understand their responsibilities in maintaining safe, reliable, and auditable AI agent operations.

.png)

.png)