A2A vs MCP: how they overlap and differ

If you’re building AI agents, it’s easy to conflate key concepts and standards that differ in meaningful ways.

At the top of this list are the Agent-to-Agent (A2A) protocol and the Model Context Protocol (MCP).

We’ll break down how each works, showcase examples of using them, and compare them directly at the end.

What is A2A?

The Agent-to-Agent protocol is an open standard from Google that outlines how AI agents can understand and interact with one another.

The protocol includes:

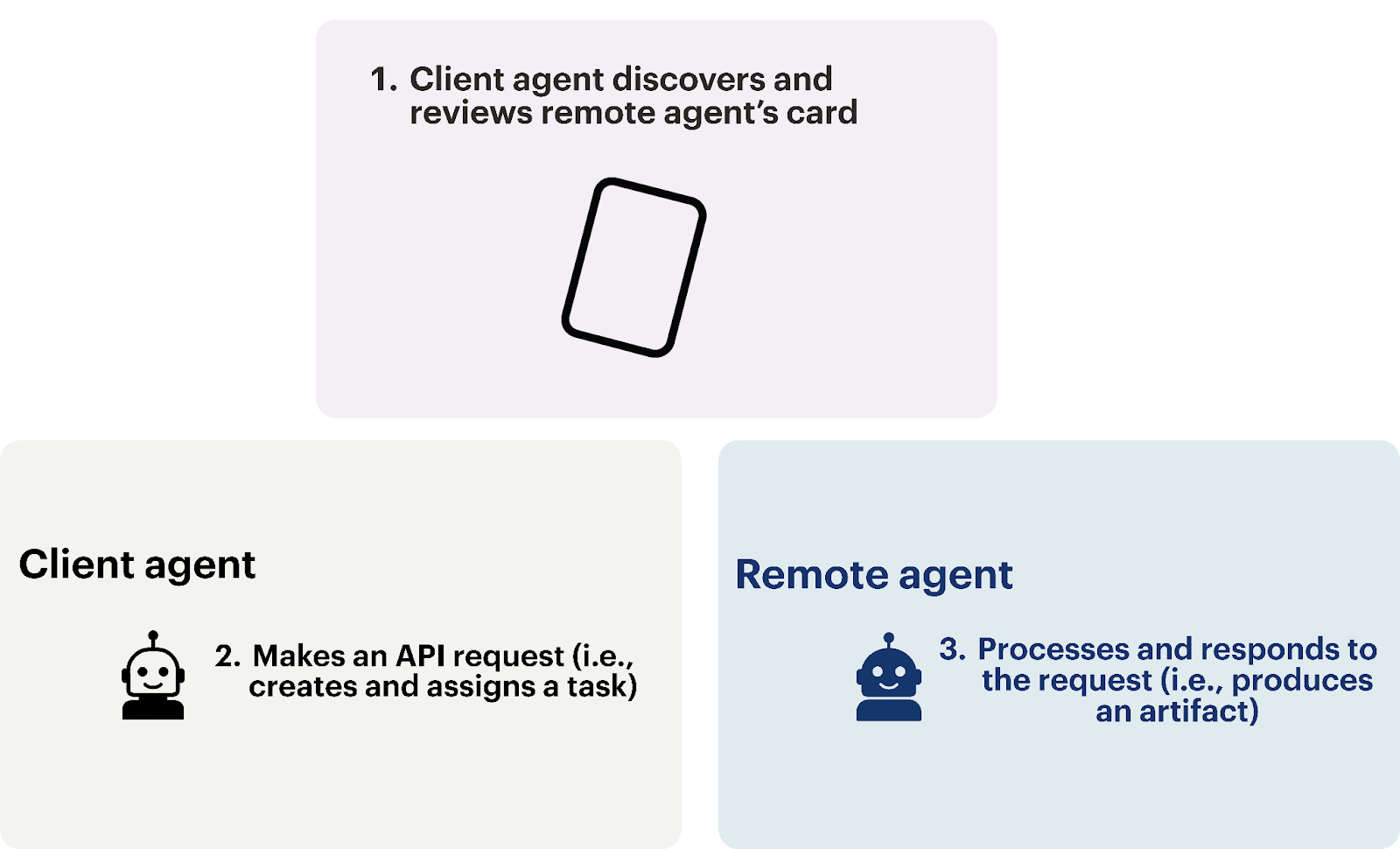

- Client agents: A client agent requests information from, or delegates work to, a remote AI agent (i.e., it formulates and delivers a task).

- Remote agents: A remote agent receives the task and responds to it.

- Agent Cards: Each AI agent has a card, or a public JSON metadata file, that includes its purpose, name, API endpoint, authentication mechanisms, supported data modalities, and more. This context helps the client agent discover and identify the appropriate remote agent before assigning it a task.

- Tasks: These are API requests from the client agent to the remote agent. The tasks can request information from the remote AI agent or ask it to perform an activity.

- Parts: Regardless of the request, a task includes “parts,” or specific types of data. This includes <code class="blog_inline-code">TextPart</code> for plain text, <code class="blog_inline-code">FilePart</code> for files, and <code class="blog_inline-code">DataPart</code> for structured JSON data.

- Artifacts: These are the outputs the remote agent produces in response to a task.
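To make these components more concrete, here is a minimal Python sketch of an Agent Card and a task made up of parts. It is an illustration only: the field names (`input_modes`, `authentication`, `parts`), the agent name, and the URL are simplified assumptions, not the exact schema from the A2A specification.

```python
import json

# Hypothetical Agent Card for a remote agent. Field names are simplified
# for illustration and may not match the A2A specification exactly.
agent_card = {
    "name": "sales-guidance-agent",
    "description": "Drafts follow-up guidance for sales reps.",
    "url": "https://agents.example.com/sales-guidance",
    "authentication": {"schemes": ["bearer"]},
    "input_modes": ["text", "data"],
}


def text_part(text):
    """Plain text content (the TextPart described above)."""
    return {"type": "text", "text": text}


def data_part(data):
    """Structured JSON content (the DataPart described above)."""
    return {"type": "data", "data": data}


def build_task(task_id, parts):
    """Bundle parts into a task payload for the remote agent."""
    return {"id": task_id, "parts": parts}


def supports(card, part):
    """Check whether the remote agent's card lists this part's modality."""
    return part["type"] in card["input_modes"]


task = build_task(
    "task-001",
    [
        text_part("Summarize this lead for the assigned rep."),
        data_part({"company": "Acme", "employees": 250}),
    ],
)

# The client agent consults the card before sending anything.
can_send = all(supports(agent_card, part) for part in task["parts"])
print(can_send)
print(json.dumps(task, indent=2))
```

In this sketch the card does the discovery work: the client agent only sends the task once it has confirmed that the remote agent accepts every modality the task contains.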

The A2A protocol can support countless internal and customer-facing scenarios.

For example, say you’re trying to build a fully autonomous lead routing workflow. Here’s how the A2A protocol can support this:

1. Once a lead comes in, your marketing team’s AI agent (the client agent in this case) can enrich the lead with external data sources and then determine the lead’s level of fit based on predefined criteria.

2. If the lead is deemed high fit, the AI agent creates a task for another AI agent (the remote agent) to share the lead with the assigned sales rep and provide guidance on how to follow up.

3. The remote agent can use relevant historical data in your CRM (e.g., similar leads that have recently been updated to closed-won) to generate helpful guidance for the sales rep in an app like Slack.
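The three steps above can be sketched in a few lines of Python. Everything here is a stand-in: the fit criteria, the `Lead` fields, and the function names are hypothetical, and the two agents are plain functions rather than real A2A endpoints.

```python
from dataclasses import dataclass


@dataclass
class Lead:
    email: str
    company_size: int
    industry: str


# Hypothetical fit criteria; a real team would define its own.
TARGET_INDUSTRIES = {"software", "fintech"}
MIN_COMPANY_SIZE = 100


def is_high_fit(lead):
    """Step 1: score the enriched lead against predefined criteria."""
    return (
        lead.company_size >= MIN_COMPANY_SIZE
        and lead.industry in TARGET_INDUSTRIES
    )


def create_task(lead, rep):
    """Step 2: the client agent formulates a task for the remote agent."""
    return {
        "instruction": "Share this lead with the rep and suggest a follow-up.",
        "lead": {"email": lead.email, "industry": lead.industry},
        "assigned_rep": rep,
    }


def remote_agent_handle(task, closed_won_examples):
    """Step 3: the remote agent drafts guidance from similar closed-won leads."""
    industry = task["lead"]["industry"]
    similar = [e for e in closed_won_examples if e["industry"] == industry]
    return (
        f"@{task['assigned_rep']}: new lead {task['lead']['email']}. "
        f"{len(similar)} similar lead(s) recently closed-won."
    )


def route(lead, rep, closed_won_examples):
    """Run the full workflow; low-fit leads produce no task."""
    if not is_high_fit(lead):
        return None
    return remote_agent_handle(create_task(lead, rep), closed_won_examples)


history = [{"industry": "fintech"}, {"industry": "retail"}]
message = route(Lead("ana@example.com", 250, "fintech"), "sam", history)
print(message)
```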

Note: The A2A protocol can support significantly more complex workflows that involve several AI agents.

What is MCP?

MCP is an open standard from Anthropic that defines how large language models (LLMs) can interact with 3rd-party applications.

The protocol is made up of:

- An LLM: This can be a model from Google (Gemini), Anthropic (Claude), OpenAI (GPT), or another provider.

- An MCP client: A component within the AI application that hosts the LLM. It establishes and manages the connection with an MCP server.

- An MCP server: A software application that hosts tools, or exposed data and functionality that the MCP client can interact with.

For example, say you offer a recruiting automation platform that uses an LLM to provide customers with high-fit candidates for a given role.

To facilitate this, you can integrate your LLM with your customers’ applicant tracking systems (ATSs) via MCP, assuming those ATSs offer MCP servers or MCP-compatible interfaces.

Once connected, your LLM can call MCP tools to retrieve open roles and relevant historical hiring data—including candidates who’ve received and accepted offers. The LLM can then combine this context with your internal candidate database to recommend top matches for each role.
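As a rough sketch of what such a tool call looks like on the wire: MCP messages use JSON-RPC 2.0, and a tool is invoked with the `tools/call` method. The tool name `list_open_roles`, its arguments, and the `fake_ats_server` function below are hypothetical; the fake server only exists so the example runs without a real ATS.

```python
import itertools
import json

_ids = itertools.count(1)


def tool_call_request(tool_name, arguments):
    """Build a JSON-RPC 2.0 request that asks an MCP server to run a tool."""
    return {
        "jsonrpc": "2.0",
        "id": next(_ids),
        "method": "tools/call",
        "params": {"name": tool_name, "arguments": arguments},
    }


def fake_ats_server(request):
    """Stand-in for an ATS's MCP server, so the example runs offline."""
    roles = [{"id": "r-1", "title": "Data Engineer", "status": "open"}]
    return {
        "jsonrpc": "2.0",
        "id": request["id"],
        "result": {"content": [{"type": "text", "text": json.dumps(roles)}]},
    }


# "list_open_roles" is a hypothetical tool name for illustration.
request = tool_call_request("list_open_roles", {"status": "open"})
response = fake_ats_server(request)

# Tool results come back as content items; here the text item holds JSON.
open_roles = json.loads(response["result"]["content"][0]["text"])
print(open_roles[0]["title"])
```

In practice you would likely use an MCP SDK rather than building requests by hand; the point of the sketch is only to show the shape of the exchange between the MCP client and server.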

Now that we’ve covered both protocols, you might still be wondering how, exactly, they compare. We’ll provide a concise breakdown next.

Related: How MCP compares to RAG

MCP vs A2A

MCP and A2A are both open standard protocols that let you automate business or product workflows, but they support fundamentally different use cases. MCP facilitates integrations between LLMs and 3rd-party data sources, while A2A supports interoperability between AI agents.

In other words, you would use MCP whenever an AI agent needs to retrieve data from, or take action in, a 3rd-party application at runtime. And you would use A2A whenever an AI agent needs to interact with another AI agent, whether it’s to pass off a task, request information, or coordinate on solving a problem.
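One way to picture this rule of thumb is as a simple lookup on the kind of counterpart the agent is dealing with. The `Target` enum, the `pick_protocol` function, and the example steps are hypothetical names used only to illustrate the distinction.

```python
from enum import Enum


class Target(Enum):
    APPLICATION = "application"  # e.g., a CRM or ATS that exposes tools
    AGENT = "agent"              # another AI agent that accepts tasks


def pick_protocol(target):
    """Map the kind of counterpart to the protocol described above."""
    if target is Target.APPLICATION:
        return "MCP"
    if target is Target.AGENT:
        return "A2A"
    raise ValueError(f"unknown target: {target!r}")


plan = [
    ("fetch open roles from the ATS", Target.APPLICATION),
    ("hand the lead to the sales guidance agent", Target.AGENT),
]
for step, target in plan:
    print(f"{step}: {pick_protocol(target)}")
```

A single workflow can mix both: the same agent might use MCP for its own tool calls and A2A when it delegates work to another agent.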

Give your agents access to thousands of enterprise-grade tools

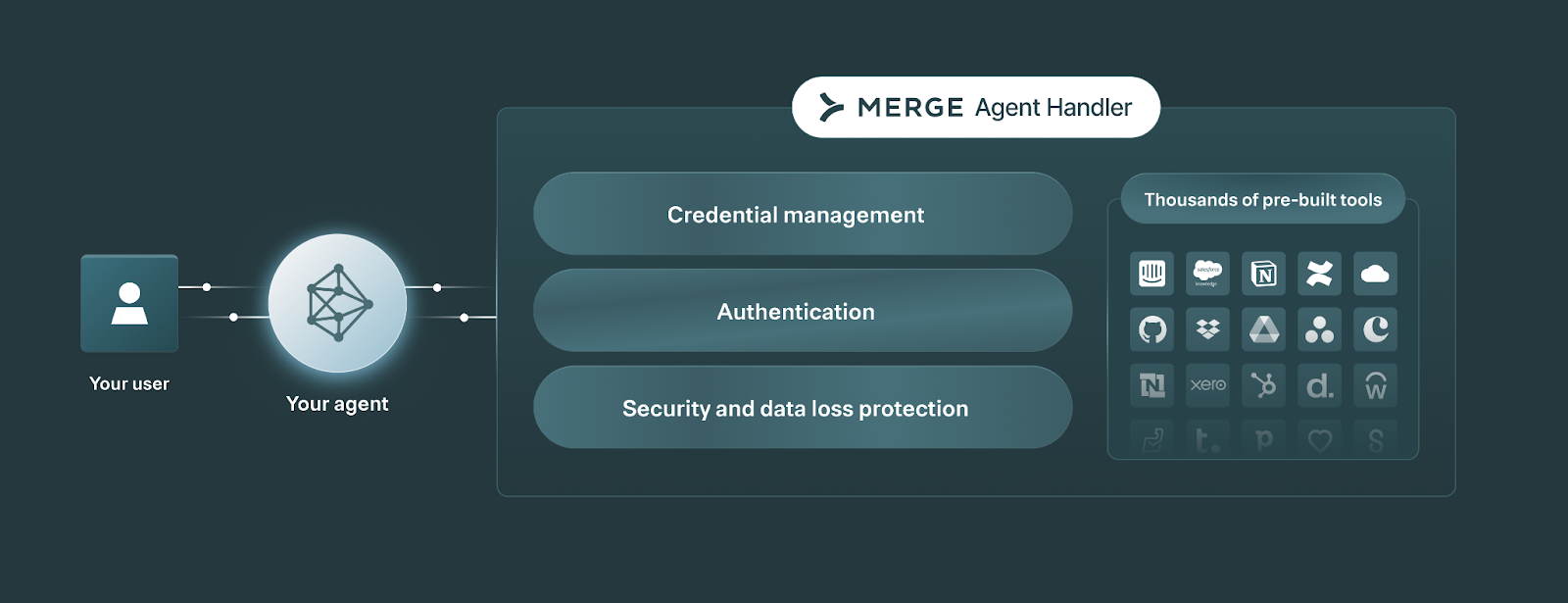

Merge Agent Handler lets your AI agents securely access over a thousand tools.

Agent Handler also provides the tooling you need to test, monitor, and manage your agents’ tool calls. This includes a comprehensive evaluation suite to benchmark any tool call’s performance, fully-searchable logs to drill into individual tool calls and API requests, customizable alerts so teams can detect and resolve issues quickly, and more.

Test Merge Agent Handler for yourself by signing up for a free account!

FAQ

In case you have more questions on A2A or MCP, we’ve addressed several below.

Can A2A replace MCP?

A2A can potentially replace MCP in scenarios where the remote agent can access and interact with relevant data in a target 3rd-party system. However, since this often isn’t the case, A2A can’t fully replace MCP.

Which is better, A2A or MCP?

Neither is necessarily better than the other, as the better approach will depend on your integration scenario and the AI agent(s) at your disposal. That said, since MCP allows your AI agent to access a 3rd-party application directly, it’s often easier and more efficient to use than A2A.

How does RAG differ from A2A and MCP?

RAG, or retrieval-augmented generation, is an approach that an AI agent or an enterprise search solution can use to generate personalized, up-to-date, and accurate outputs.

In other words, it isn’t a protocol that an AI agent needs to follow, but merely an approach to improving an underlying LLM’s outputs.

RAG often overlaps with A2A and MCP in that AI agents can use these protocols as a step in a RAG pipeline. For example, an AI agent can call a tool on an MCP server to retrieve relevant data. The AI agent can then use this context to generate better outputs.
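Here is a minimal sketch of that retrieve-then-generate pattern. The `retrieve` function is a toy keyword matcher standing in for the retrieval step (which, in the scenario above, could be an MCP tool call), and the documents and prompt wording are made up for illustration. The final call to an LLM is left out.

```python
def retrieve(query, documents, top_k=2):
    """Rank documents by how many query words they share (toy retriever)."""
    words = set(query.lower().split())
    scored = [(len(words & set(d.lower().split())), d) for d in documents]
    scored = [pair for pair in scored if pair[0] > 0]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [d for _, d in scored[:top_k]]


def build_prompt(question, context):
    """Place the retrieved context ahead of the question for the LLM."""
    lines = ["Answer using only the context below.", "", "Context:"]
    lines += [f"- {c}" for c in context]
    lines += ["", f"Question: {question}"]
    return "\n".join(lines)


docs = [
    "Refund requests are processed within 5 business days.",
    "Support hours are 9am to 5pm on weekdays.",
    "Enterprise plans include a dedicated account manager.",
]
question = "How long do refund requests take?"
context = retrieve(question, docs)
prompt = build_prompt(question, context)
print(prompt)
```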
