Table of contents

How to integrate with the Google Drive API via Python

.jpg)

Whether you're building internal tools, customer-facing apps, or data pipelines, connecting to Google Drive can add significant value.

With this in mind, we'll show you how to build reliable Python integrations with the Google Drive API. This includes essentials like authentication and core file operations, such as uploading and downloading.

We'll also cover some best practices and share some use cases we're seeing in the real world.

Prerequisites

Before getting started, make sure you have the following prerequisites:

- Python 3.7 or higher installed on your system

- Google account that you can use for testing the integration (with Google Drive accessibility). This account will own the files you upload or download during testing

Related: Every step you need to take to integrate with the Dropbox API

Set up a project in the Google Cloud Console

Before you can interact with the Google Drive API, you need to set up a project in the Google Cloud Console.

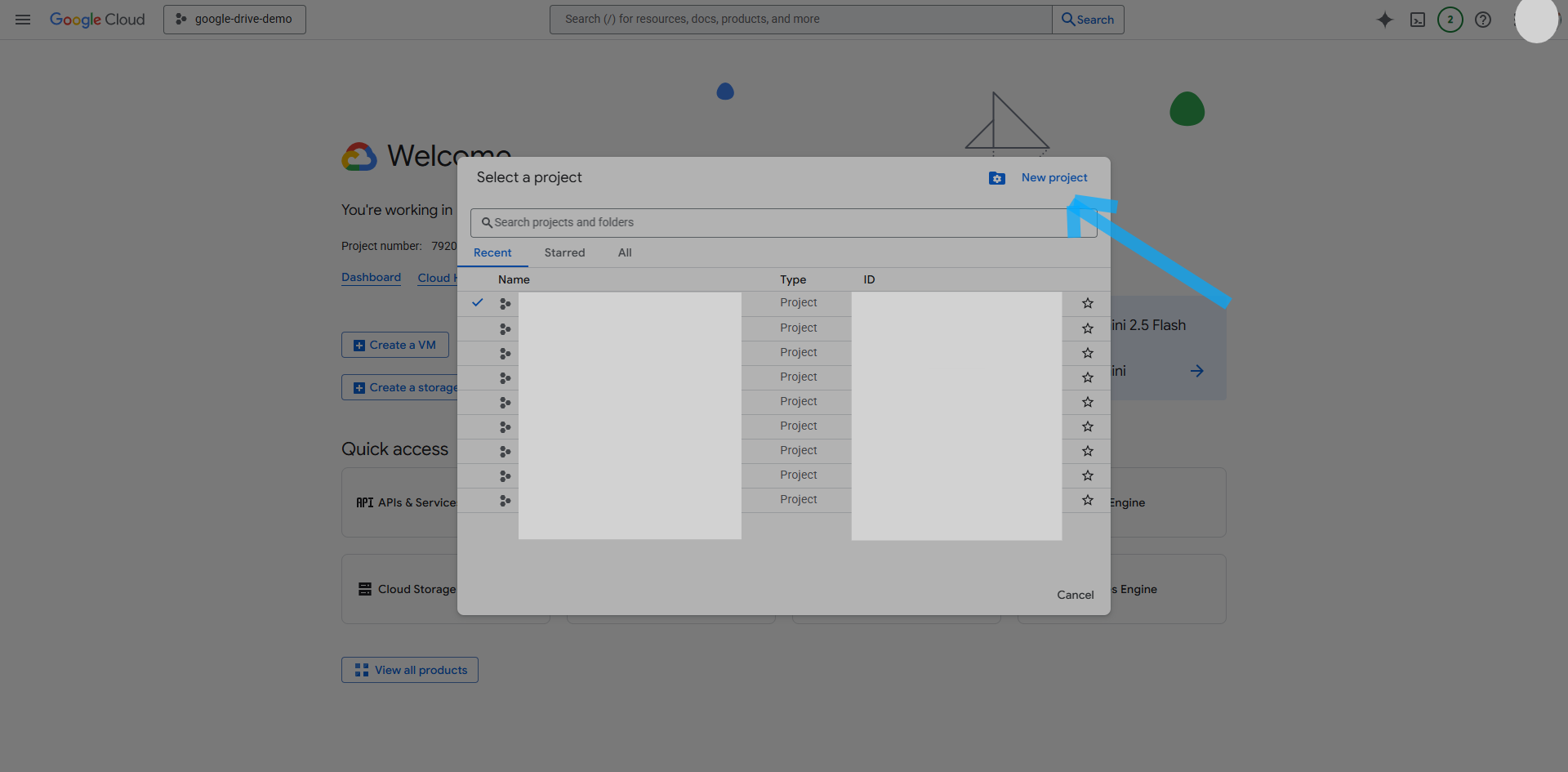

Start by navigating to the Google Cloud Console. If you don't already have a project, click the project drop-down at the top of the page and select New Project:

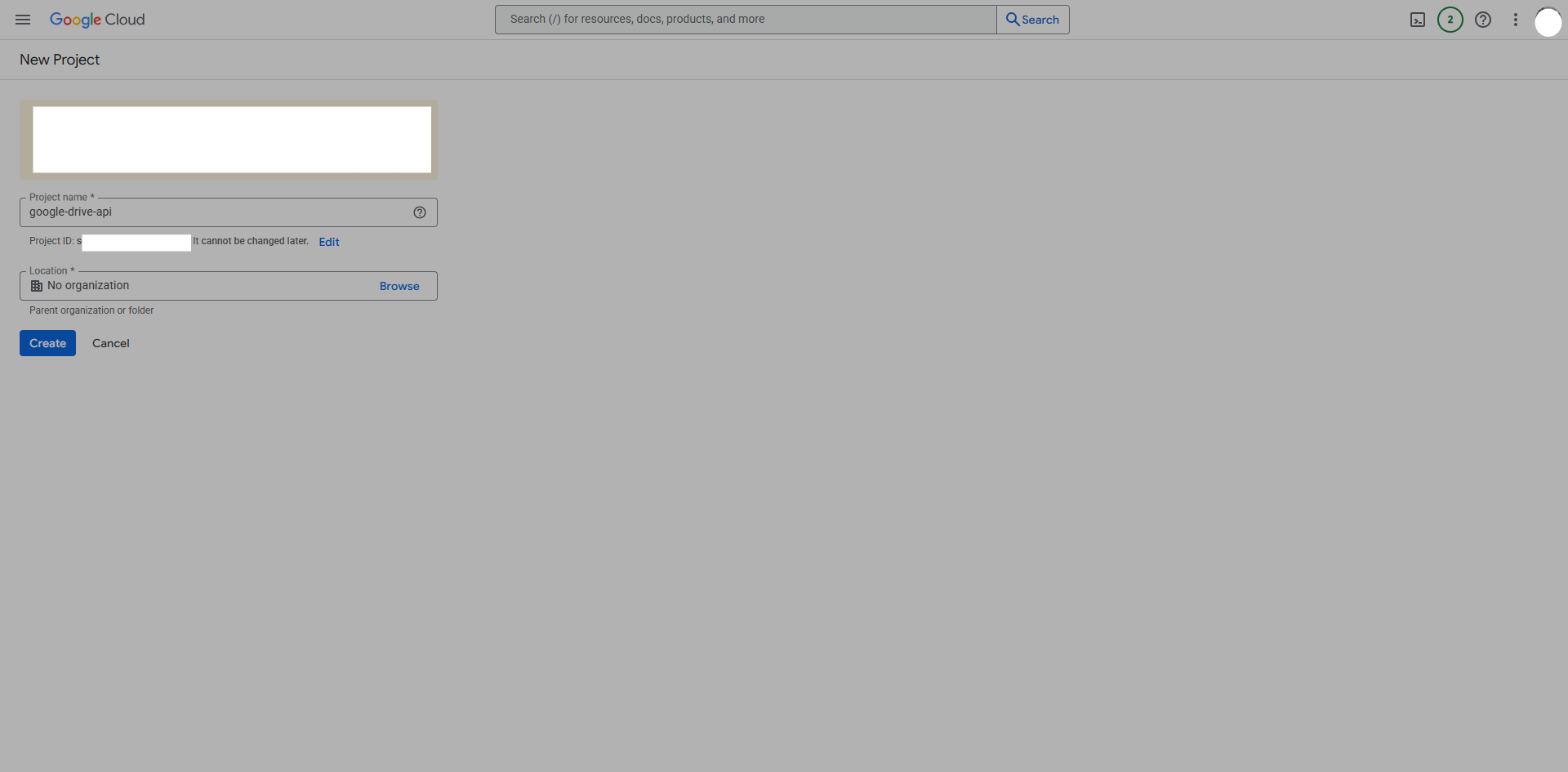

Give your project a name and then click Create:

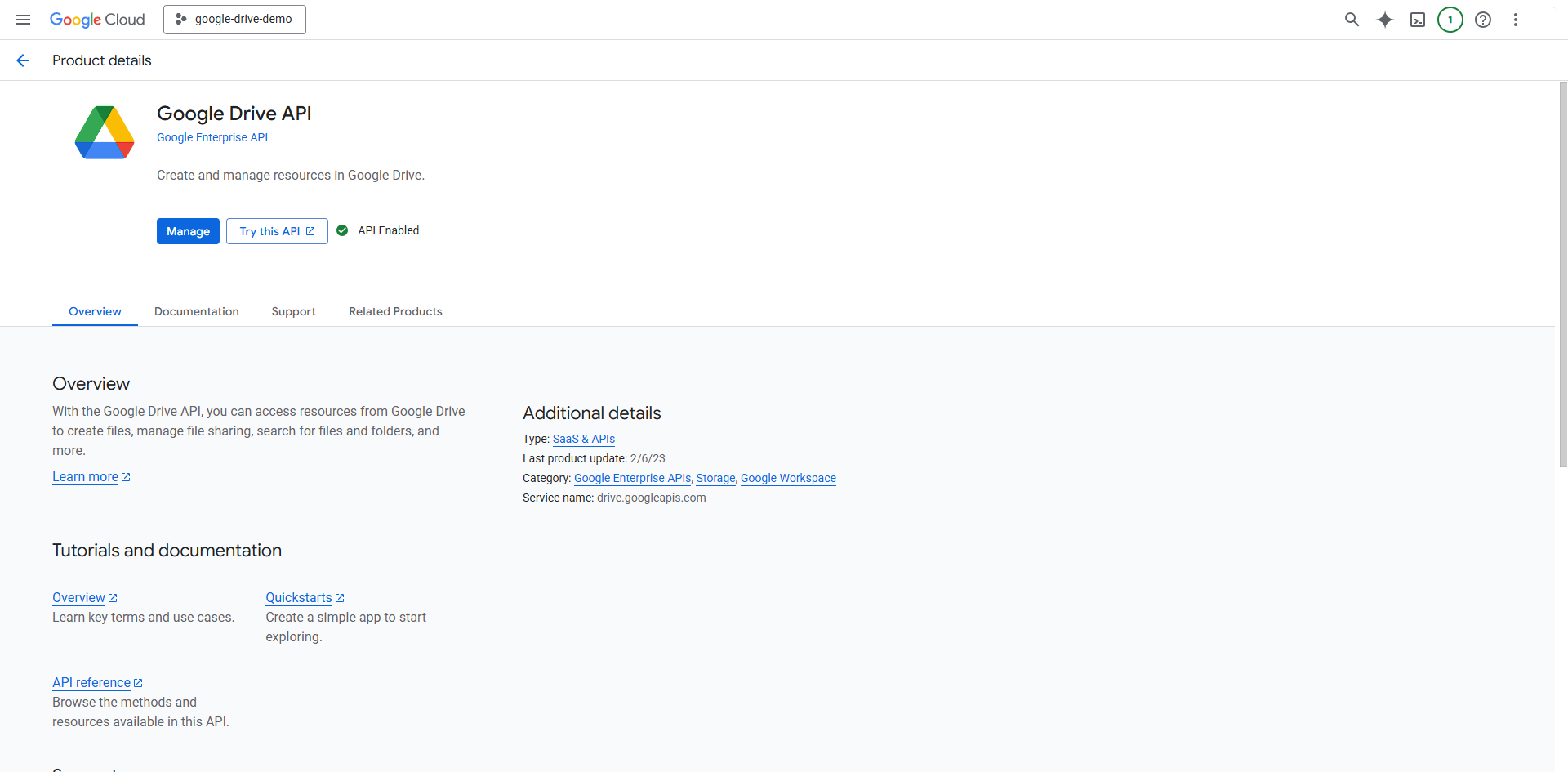

Once your project is created, make sure it's selected in the top navigation bar. In the left sidebar, click APIs & Services > Library. Search for "Google Drive API", click it, and then click Enable:

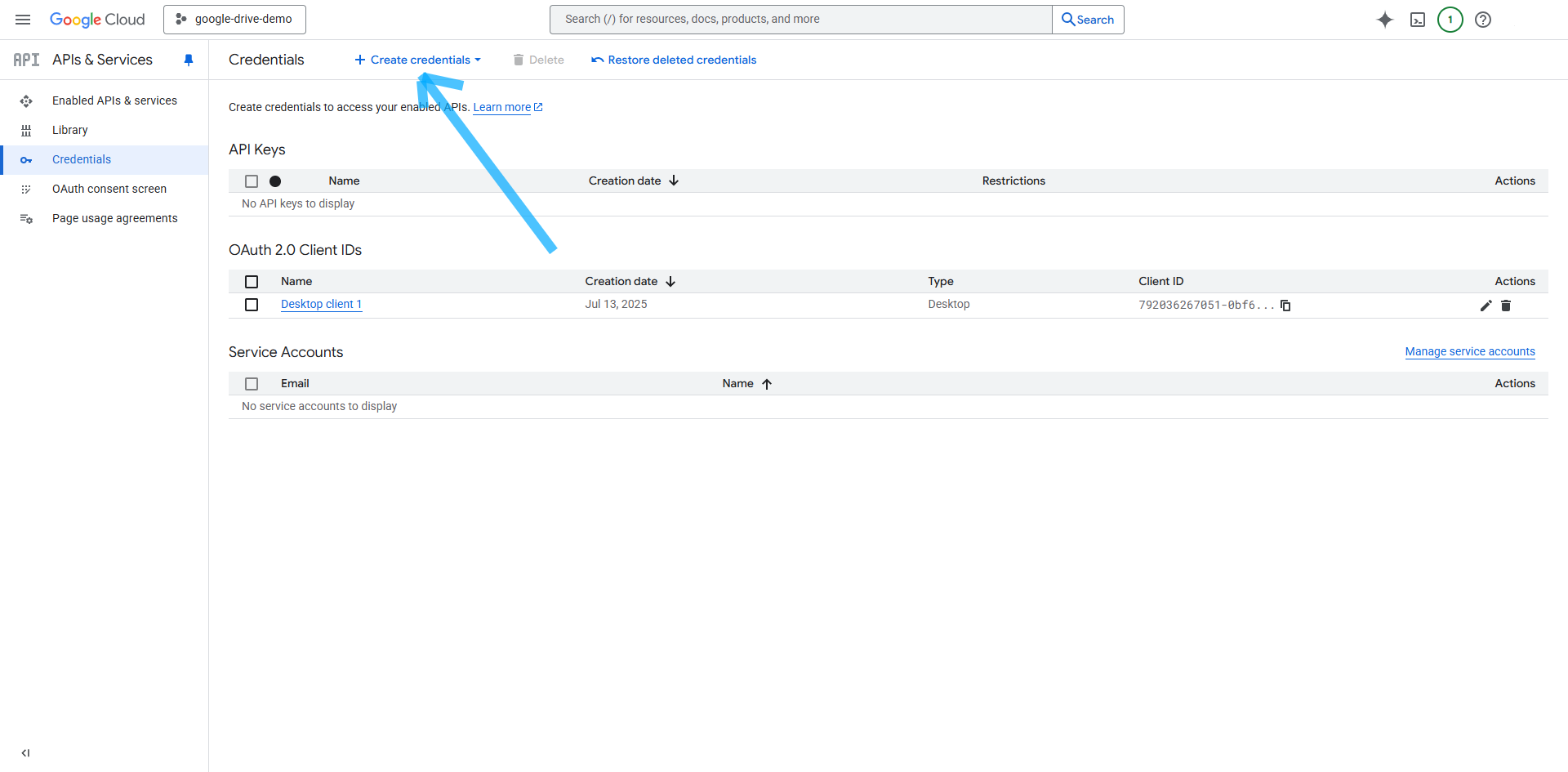

After enabling the API, you need to set up OAuth 2.0 credentials so your Python app can authenticate. In the sidebar, go to APIs & Services > Credentials > + Create credentials and choose OAuth client ID. If this is a new project, you will be prompted to provide an app name and support email:

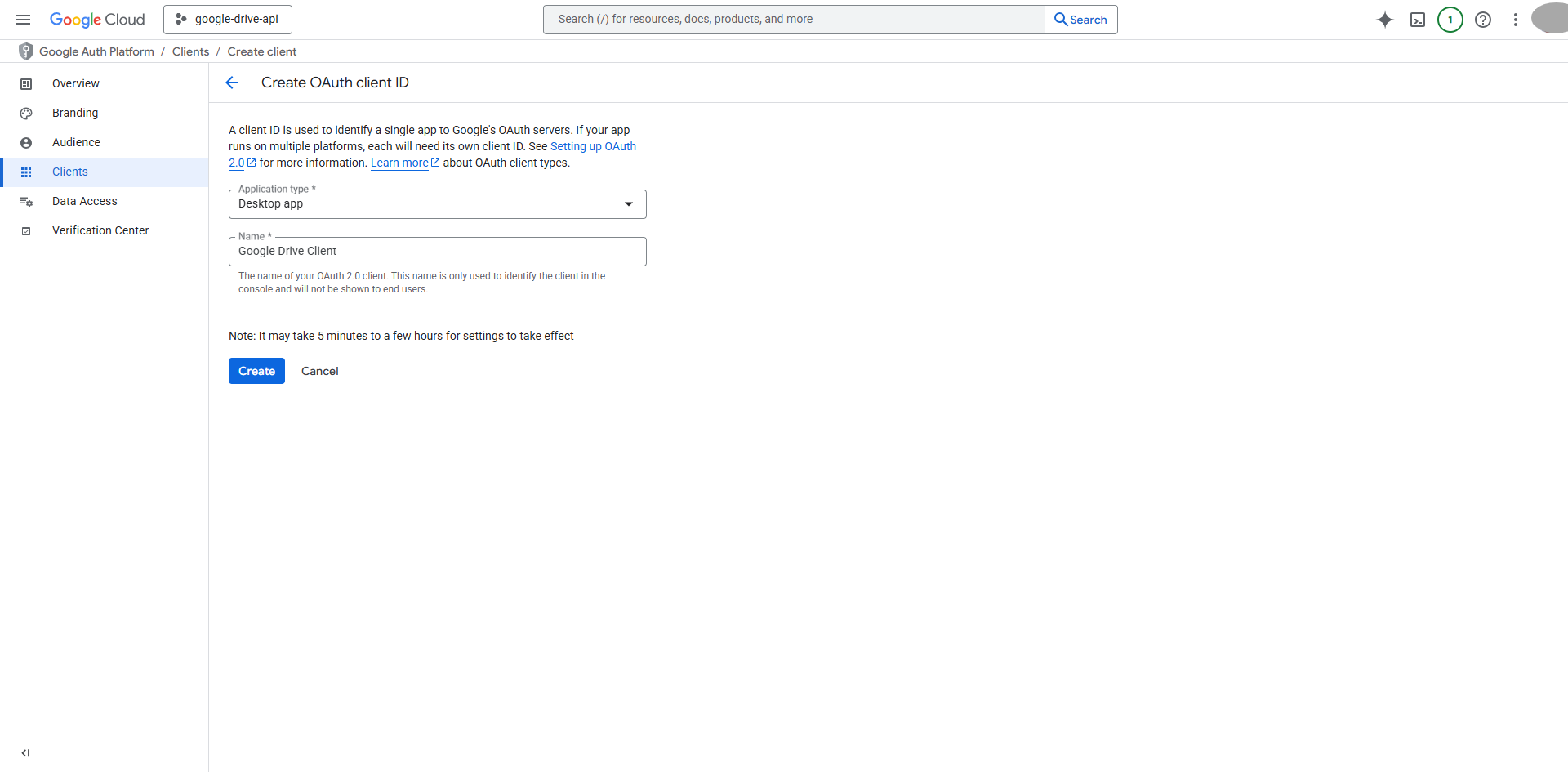

For the application type, select Desktop app, give it a name, and click Create. Download the client secret json file and save it as credentials.json in your project directory. This file is required for authentication in your Python code:

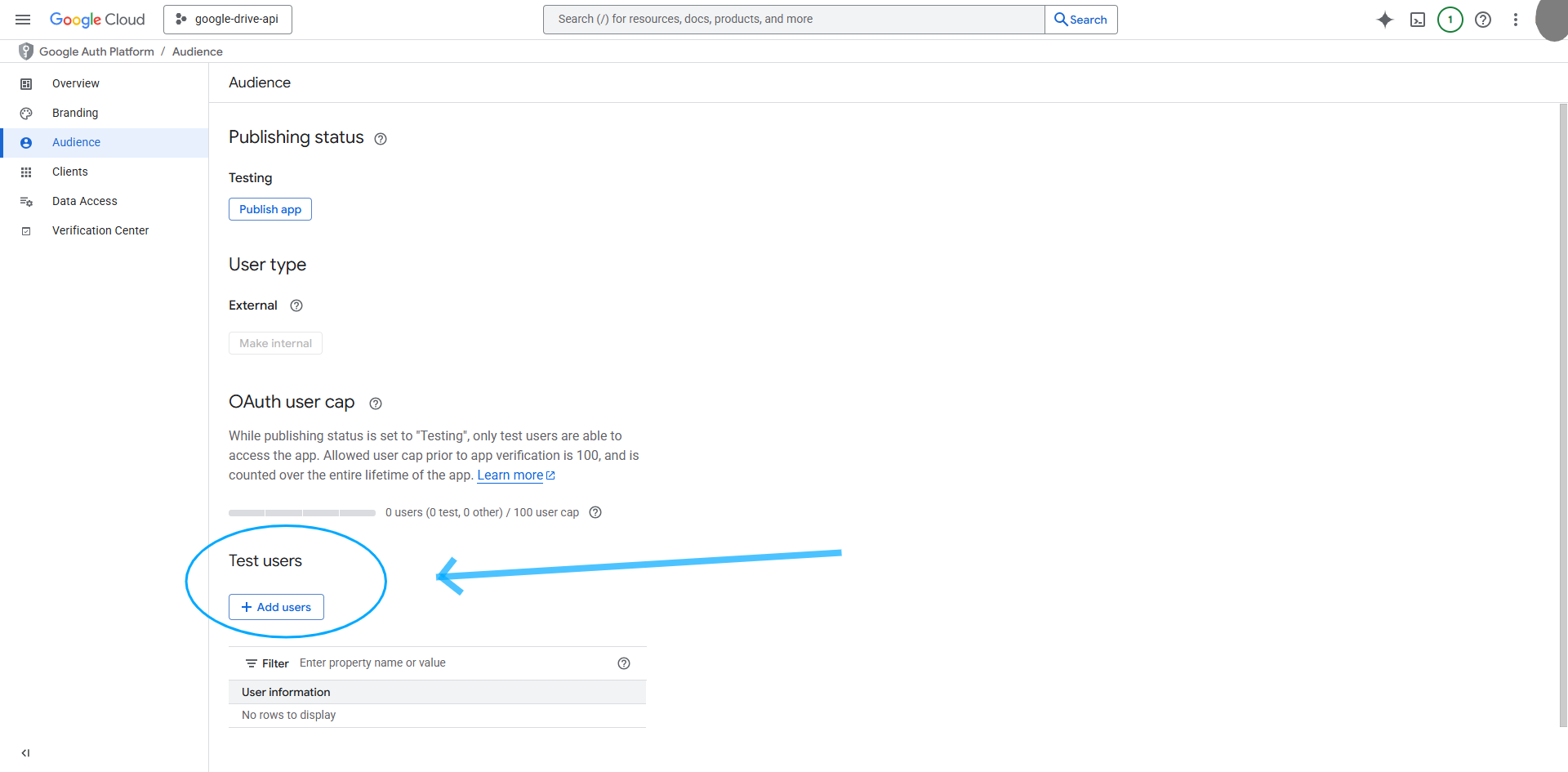

Lastly, navigate to the Audience section and make sure to add your email address as a test user; otherwise, you will encounter an error during the OAuth flow:

For more detailed, step-by-step instructions, refer to the Google Drive API Python Quickstart guide.

Install and authenticate with Google Drive API in Python

To integrate with Google Drive, you need to install the official Google API client library for Python:

Consider using a virtual environment to isolate your project dependencies:

This approach prevents conflicts with other Python projects and makes dependency management cleaner.

Related: How to get your Google Drive API key

Set up authentication

Before your Python application can access Google Drive on behalf of a user, it must be properly authenticated. Google supports several authentication methods for its APIs, but the most common include the following:

- API key: This is a simple method, but it's only suitable for accessing public data and not recommended for Google Drive, which contains private files

- Service account: This is used for server-to-server interactions, typically when your app needs to access files owned by a Google Workspace domain, not individual user files

- OAuth 2.0 Client ID: This is the standard for user-facing applications. This allows your app to request permission from a user to access their Google Drive files, and this is the most secure and flexible approach for most integrations

In this guide, you'll use OAuth 2.0 with a desktop app client type. This method is ideal for local development, scripts, and desktop applications because it allows users to explicitly grant your app access to their Drive data. The process involves the following:

1. Scopes: You specify which parts of a user's Drive your app needs to access (eg read-only access, full access, or partial access to only the files your app creates).

2. User consent: The user is prompted in their browser to approve the requested permissions.

3. Token management: After consent, your app receives access and refresh tokens. The access token is used for API calls, while the refresh token allows your app to obtain new access tokens without further user interaction.

Following is a code snippet that implements this flow, manages tokens securely, and ensures your app always has valid credentials. Save this code to a file named drive_service.py as you'll use it in later examples:

This code manages several key parts of authenticating with Google Drive:

- Scope selection strategy: The <code class="blog_inline-code">SCOPES</code> list includes multiple permission levels, but you should choose the minimum required for your application.

The <code class="blog_inline-code">drive.file</code> scope is particularly powerful for most business applications—it allows access only to files your app creates or that users explicitly open with your app. It's ideal for security-conscious environments.

- Token management: The function saves authentication tokens to <code class=="blog_inline-code">token.json</code> and automatically loads them on subsequent runs. This prevents users from repeatedly going through the OAuth flow, which would be frustrating in a production application

- Automatic refresh logic: This is critical for production applications. Google access tokens expire after one hour, but refresh tokens can be used to obtain new access tokens without user interaction. This automatic refresh prevents your application from breaking when tokens expire, a common source of production failures.

The OAuth flow opens a browser window where users grant permissions and then redirects to a local server to capture the authorization code. This approach works well for desktop applications and development environments.

List files with intelligent querying

When working with files stored in Google Drive, you often need to list the files for users to see them, search through them, or process files in bulk. Google Drive's API gives you the <code class="blog_inline-code">files() .list()</code> method to do exactly that.

When working with large Drives, results are paginated: the API returns a limited set of files per request, along with a <code class="blog_inline-code">nextPageToken</code> if more files remain. By repeatedly calling the API with this token, you can retrieve all files, no matter how many there are.

Create another file and name it <code class="blog_inline-code">list_all_files.py</code>.

Add the following code:

This function lists all files in your Google Drive, printing each file's name, ID, and MIME type. It's designed for both clarity and efficiency: by specifying <code class="blog_inline-code">fields=nextPageToken, files(id, name, mimeType)"</code>, the code fetches only the information you actually need. This makes the response much faster than the default (which includes a lot of unnecessary metadata). The function also handles pagination using the pageToke parameter so it keeps requesting more files until very file in your Drive has been listed. This is essential for Drives with hundreds or thousands of files.

You can easily adapt this function to search for specific file types, dates, or folders by adding a q parameter to your API call. For example, you could set <code class="blog_inline-code">q="fields="nextPageToken</code> to find only PDFs or use <code class="blog_inline-code">q="modifiedTime > '2023-01-01T00:00:00'"</code> to find files modified after a certain date. You can even combine conditions, such as searching for JPEG images modified after a specific date, or restrict your search to a particular folder by using the folder's ID.

To run this code, make sure you've already set up authentication, save the function to a file named <code class="blog_inline-code">list_all_files.py</code>, and run it with Python using <code class="blog_inline-code">python list_all_files.py</code>. The script prints out the details of every file it finds, and you can modify the query logic as needed for your use case.

Related: How to use the OneDrive API

Download files with resumable protocol

Now that you can list files, let's download them. To download a file, you need to specify a file ID and then use the <code class="blog_inline-code">service.files.get_media()</code> method.

The following is a snippet you can use to download files from Google Drive:

This code uses <code class="blog_inline-code">MediaIoBaseDownload</code> to handle file downloads with Google's resumable download protocol. If your network connection drops or the server has a temporary issue, the download picks up where it left off instead of starting over. This is particularly important for large files where restarting wastes time and bandwidth.

The function downloads files in chunks and shows progress updates, which prevents your program from running out of memory when handling big files. One important thing to note is that this approach works for regular files, but Google Workspace documents like Docs, Sheets, and Slides are different—they don't exist as traditional files, so they initially need to be exported to a specific format using service.files().export_media(fileId=file_id, mimeType='application/pdf' instead of the regular download method.

To run this code, save this snippet in a file as <code class="blog_inline-code">download_file.py</code>, set your file_id and destination variables, and run it with <code class="blog_inline-code">python download_file.py</code>. This downloads the file to your local machine.

Upload files with resumable transfers

To upload files to Google Drive, use the files() .create() method along with MediaFileUpload to handle the actual file transfer. The following snippet uploads these files:

This code handles file uploads to Google Drive with some features that make it work reliably:

- The <code class="blog_inline-code">resumable=True</code> parameter ensures that if your internet connection drops while uploading a large file, the upload will continue from where it left off instead of starting over completely

- The <code class="blog_inline-code">file_metadata</code> dictionary lets you set basic information about the file, like its name and which folder it should go into. You can also add descriptions or other properties if needed

- The <code class="blog_inline-code">fields='id'</code> parameter tells Google to send back only the file ID after the upload finishes, which makes the response faster since you don't need all the extra file details right away

To run this code, save the snippet in a file called <code class="blog_inline-code">upload_file.py</code>, set your local_path and drive_folder_id variables, and run it with <code class="blog_inline-code">python upload_file.py</code>.

See the file uploaded to your Google Drive and get back the file ID when it's done:

All the code for this tutorial is available in this GitHub repo.

Examples of using the Google Drive API

Integrating with the Google Drive API opens up several ways to automate tasks and manage data. Here are three practical examples:

Automate weekly CSV imports

Many teams rely on stakeholders to regularly drop CSV files (eg sales data, inventory updates, or partner reports) into shared Drive folders. With the Drive API, you can build Python scripts that automatically detect new CSVs, ingest their contents, and trigger downstream workflows (like database updates or analytics jobs). This approach eliminates manual intervention, reduces the risk of human error, and enables near real-time data integration.

For robust solutions, track processed file IDs or timestamps to avoid duplicate ingestion and use in-memory processing (e.g., io.BytesIO) to minimize disk I/O and improve security.

Generate enterprise reporting and analytics

The Drive API helps you programmatically extract and aggregate file metadata (such as creation dates, last modified times, file types, and sharing permissions) across your organization's Drive. By analyzing this metadata, you can uncover trends in document activity, identify underutilized or orphaned files, and monitor compliance with data governance policies. For example, you can build dashboards that visualize document activity over time or automatically flag files with overly broad sharing settings.

Manage machine learning and AI data pipelines

Google Drive is a natural fit for managing data sets, model artifacts, and experiment results in machine learning (ML) workflows. The API lets you automate the collection, versioning, and distribution of training data, ensuring that ML models always have access to the latest, curated data sets. This is especially helpful for teams working across multiple environments (development, staging, production) as you can enforce consistent data access and automate the movement of large files.

You can also use the Google Drive integration to support key product features and functionality. Here are just a few examples:

Power enterprise AI search

Say you offer a product that lets users find information based on the company documents they have access to.

To help power this product, you can connect with customers' instances of Google Drive, continually ingest their documents (e.g., every 24 hours) and then leverage a large language model (LLM) to understand the users' inputs, and use retrieval-augment generation (RAG) to provide accurate and clear answers.

For example, Guru, an enterprise AI search product, integrates with customers' Google Drive instances to answer questions like below.

Automate e-signature workflows

Say you offer an e-signature platform that helps users manage the end-to-end process of executing key contracts, from new hire offer letters to contractor NDAs.

To help your product automate the tedious parts of these workflows, you can integrate with customers' instances of Google Drive and support the following flows: Any time a customer wants to send a new contract, they can access the latest template within your product; and any time a contract is fully executed, it's automatically uploaded to the appropriate folder in Google Drive.

Best practices for integrating with the Google Drive API

When integrating Google Drive in production environments, you should focus on efficiency, security, and scalability. The following are some techniques to help you do just that:

Apply incremental data loading

In production, you can't afford to process every file every time—you need to focus on just the new or changed ones. This incremental loading pattern is especially important for production systems that process large volumes of files. Instead of downloading everything, you grab only what's actually changed.

You can use query parameters and filtering options to reduce the amount of data you have to process from the Google Drive API.

Use caching and temporary storage

Repeatedly requesting the same files from the Google Drive API can slow down your application and hit rate limits. To optimize performance, implement caching for frequently accessed files. By storing these files temporarily on the disk, you can reduce latency and avoid excessive API calls. Be sure to include cache invalidation strategies that update cached files when they are modified or after set intervals.

Perform error handling and logging

In production systems, robust error handling is crucial. You need to implement retry logic with exponential backoff for rate limits, handle temporary server errors gracefully, and log failures. The most common failure modes include rate limiting, temporary server errors, and permanent resource errors.

Follow security and compliance best practices

When dealing with sensitive files, security is a major concern. Make sure you don't have files with overly broad sharing permissions that violate your organization's security policies.

The Google Drive API's permissions <code class="blog_inline-code">type</code> field reveals different access patterns: <code class="<code class="blog_inline-code">user</code> for individual access, <code class="blog_inline-code">group</code> for group-based access, <code class="blog_inline-code">domain</code> for domain-wide access, and anyone for public access. Understanding these patterns helps you identify security risks and optimize access control strategies.

When handling credentials, always load secrets from environment variables or secure vault systems; never hard-code them in the source code. Implement automated credential rotation every thirty to ninety days and use service accounts with minimal required scopes rather than requesting broad https://www.googleapis.com/auth/drive access.

Set up audit trails for all critical API interactions (eg file creation and deletion), monitor unusual access patterns, and implement data loss prevention policies that scan for sensitive information before processing. For compliance frameworks like System and Organization Controls (SOC) 2 type 2, Health Insurance Portability and Accountability Act (HIPAA), or General Data Protection Regulation (GDPR), you need to validate data residency requirements and maintain detailed processing logs for audits.

Implement performance tuning

For high-volume file transfers, consider optimization strategies, like chunked transfers for multi-GB files, parallel processing with careful attention to rate limits, and caching of frequently accessed files. Test under real-world loads, tune based on your specific use case, and monitor for performance regressions.

Simplify file storage integrations with Merge's Unified API

The process of integrating with Google Drive is, clearly, complex. And maintaining this integration is even more resource and time intensive for your developers, especially since this work is never ending.

To help you connect your product with Google Drive—and any other file storage solution—you can simply build to Merge's File Storage Unified API.

Learn how Merge supports file storage integrations for leading enterprise companies like Mistral AI, Foxit, and Lattice, and how it can support your product's integration use cases by scheduling a demo with one of our integration experts.

.png)

.png)