6 Model Context Protocol alternatives to consider in 2026

The Model Context Protocol (MCP) standardizes how AI agents connect to tools. Since Anthropic introduced MCP in November 2024, rapid adoption by Microsoft, OpenAI, and Google has made it the leading standard for AI tool integration.

However, MCP implementations have raised serious security concerns. For example, Equixly's security assessment found command injection vulnerabilities in 43 percent of tested MCP implementations, with another 30 percent vulnerable to server-side request forgery (SSRF) attacks and 22 percent allowing arbitrary file access.

To help you integrate AI agents with third-party applications successfully and securely, we’ll review six MCP alternatives using a three-part framework: each one's top use cases, its limitations, and its relation to MCP.

Work with Apps

OpenAI’s Work with Apps uses macOS Accessibility APIs to read content from active applications and automatically inject it into ChatGPT prompts. The system monitors supported applications (like Visual Studio Code, Xcode, Terminal, and Apple Notes) and extracts the last 200 lines of visible content when you send a ChatGPT request.

Top use cases

Work with Apps is great for debugging code without copy-pasting, getting suggestions for the file you're currently editing, and asking questions about Terminal output or log files. It also reduces context-switching during development workflows.

Limitations

Work with Apps works only on macOS and supports a limited set of applications. All extracted content goes to OpenAI's servers, and you have little control over exactly what data gets transmitted. Performance depends on the responsiveness of the macOS Accessibility APIs.

Relation to MCP

Work with Apps is application-specific context injection, not a protocol for tool integration. It complements MCP rather than competing with it: Work with Apps handles local context, while MCP handles external tool execution.

Microsoft Semantic Kernel

Semantic Kernel is Microsoft's .NET/Python SDK for building AI agents with programmatic memory management. The core architecture uses ChatHistory objects to store conversation state and ChatHistoryReducer components to manage context window limits. When a conversation approaches the token limit, a reducer compresses older messages while preserving the system prompt and recent context. ChatHistorySummarizationReducer condenses early conversation turns into a summary, while ChatHistoryTruncationReducer simply drops old messages.
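To make the two reduction strategies concrete, here is a minimal Python sketch that applies them to a plain message list. It uses only the standard library: `Message`, `truncate_history`, and `summarize_history` are hypothetical names, not Semantic Kernel's API, and the `summarize` callback stands in for the LLM call a real summarization reducer would make.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class Message:
    role: str  # "system", "user", or "assistant"
    content: str


def _split(history: list[Message]) -> tuple[list[Message], list[Message]]:
    # Separate system prompts (always preserved) from conversation turns.
    system = [m for m in history if m.role == "system"]
    turns = [m for m in history if m.role != "system"]
    return system, turns


def truncate_history(history: list[Message], keep: int) -> list[Message]:
    """Hypothetical truncation reducer: keep system prompts plus the last `keep` turns."""
    system, turns = _split(history)
    if len(turns) <= keep:
        return list(history)  # under the limit: nothing to reduce
    return system + turns[len(turns) - keep:]


def summarize_history(
    history: list[Message], keep: int, summarize: Callable[[list[Message]], str]
) -> list[Message]:
    """Hypothetical summarization reducer: fold older turns into one summary message."""
    system, turns = _split(history)
    if len(turns) <= keep:
        return list(history)
    older, recent = turns[: len(turns) - keep], turns[len(turns) - keep:]
    # In a real agent, `summarize` would call an LLM; here it is injected.
    return system + [Message("assistant", summarize(older))] + recent


history = [Message("system", "You are a support agent.")] + [
    Message("user", f"question {i}") for i in range(5)
]
print([m.content for m in truncate_history(history, keep=2)])
# ['You are a support agent.', 'question 3', 'question 4']
```

Truncation is cheap but loses information outright; summarization keeps a compressed trace of earlier turns at the cost of an extra model call, which is the trade-off the two Semantic Kernel reducers expose.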

Top use cases

Semantic Kernel is a good fit when you're building conversational agents that need sophisticated memory management or integrating AI into existing .NET or Python applications. It's particularly useful when you need programmatic control over conversation flow, especially with custom business logic built into your agent's decision-making process.

Limitations

As a code-first framework, Semantic Kernel requires more development work than configuration-based solutions, which often means a longer time-to-market. Security is also fully your team's responsibility, as the framework doesn't provide built-in authentication or authorization. Performance depends on the complexity of your agent, and the learning curve can be steep, especially for teams unfamiliar with the Microsoft ecosystem.

Relation to MCP

Semantic Kernel is an agent development framework, not a protocol. It can consume MCP servers as plugins, combining Semantic Kernel's memory management with MCP's standardized tool access. This lets you build sophisticated agents with Semantic Kernel while drawing on the broader MCP ecosystem for tool connectivity.

LangChain and LangGraph

LangChain provides modular components for building large language model (LLM) applications: tools (functions the AI can call), memory (conversation storage), and chains (sequences of operations). LangGraph extends this with graph-based agent flows that support cycles, conditional logic, and persistent state.
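The sketch below illustrates what "cycles, conditional logic, and persistent state" mean in a graph-based agent flow. It is a hypothetical, standard-library-only runner (`MiniGraph`, `set_router`, and the node functions are invented for illustration) and is not the LangGraph API: each node returns updated state, and a router decides the next node, which can loop back for a retry.

```python
from typing import Any, Callable

State = dict[str, Any]
END = "__end__"


class MiniGraph:
    """Hypothetical graph runner (not the LangGraph API): nodes update shared
    state, routers pick the next node, and edges may form cycles."""

    def __init__(self) -> None:
        self.nodes: dict[str, Callable[[State], State]] = {}
        self.routers: dict[str, Callable[[State], str]] = {}

    def add_node(self, name: str, fn: Callable[[State], State]) -> None:
        self.nodes[name] = fn

    def set_router(self, source: str, router: Callable[[State], str]) -> None:
        # The router inspects state and returns the next node's name (or END).
        self.routers[source] = router

    def run(self, start: str, state: State, max_steps: int = 25) -> State:
        current = start
        for _ in range(max_steps):
            state = self.nodes[current](state)
            current = self.routers[current](state)
            if current == END:
                return state
        raise RuntimeError("step limit reached; the graph may be looping forever")


def call_tool(state: State) -> State:
    attempts = state["attempts"] + 1
    # Simulated flaky tool: the first attempt fails, later ones succeed.
    return {**state, "attempts": attempts, "ok": attempts >= 2}


def after_tool(state: State) -> str:
    if state["ok"] or state["attempts"] >= 3:
        return "respond"
    return "call_tool"  # cycle back and retry


def respond(state: State) -> State:
    return {**state, "answer": "done" if state["ok"] else "gave up"}


graph = MiniGraph()
graph.add_node("call_tool", call_tool)
graph.add_node("respond", respond)
graph.set_router("call_tool", after_tool)
graph.set_router("respond", lambda s: END)

final = graph.run("call_tool", {"attempts": 0, "ok": False})
print(final["answer"], final["attempts"])  # done 2
```

The retry policy lives in ordinary code (`after_tool`) rather than in the model's output, which is the kind of deterministic control described below.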

Top use cases

If you're building conversational agents that need complex reasoning workflows, or autonomous systems that require error recovery and replanning, LangChain is a strong choice. It's particularly useful if your application needs extensive third-party integrations.

LangGraph works well when you want deterministic control over how your agents behave rather than relying solely on what the LLM decides to do.

Limitations

Security requires explicit implementation at every integration point. Complex chains can suffer performance issues due to Python execution overhead and sequential processing bottlenecks.

The learning curve is significant given the framework's many components and abstractions. Additionally, frequent API changes in the ecosystem create maintenance overhead.

Relation to MCP

LangChain and LangGraph are development frameworks that can integrate with MCP servers through adapters, treating them as tools in their ecosystem. This creates a hybrid approach where LangGraph provides agent orchestration while MCP servers handle standardized business integrations. This combination gives you sophisticated workflow control plus access to the growing MCP ecosystem.
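Roughly, an adapter turns each tool descriptor returned by an MCP server's `tools/list` call into a callable the framework can hand to the model, and forwards invocations as `tools/call` JSON-RPC requests. The Python sketch below assumes a hypothetical `send` transport function and a fake in-process server so it runs on its own; `wrap_mcp_tool` and `fake_server` are illustrative names, not the code of any real adapter package.

```python
import itertools
import json
from typing import Any, Callable

# Hypothetical transport: sends one JSON-RPC request string, returns the response string.
Send = Callable[[str], str]
_request_ids = itertools.count(1)


def wrap_mcp_tool(descriptor: dict[str, Any], send: Send) -> Callable[..., str]:
    """Expose one MCP tool descriptor (as returned by tools/list) as a plain callable.

    A sketch of what a framework adapter does, not a real adapter's implementation.
    """
    name = descriptor["name"]

    def tool(**arguments: Any) -> str:
        request = {
            "jsonrpc": "2.0",
            "id": next(_request_ids),
            "method": "tools/call",
            "params": {"name": name, "arguments": arguments},
        }
        response = json.loads(send(json.dumps(request)))
        if "error" in response:  # protocol-level JSON-RPC error
            raise RuntimeError(response["error"].get("message", "unknown error"))
        result = response["result"]
        # Concatenate text content blocks; other content types are ignored here.
        text = "\n".join(c["text"] for c in result["content"] if c.get("type") == "text")
        if result.get("isError"):  # tool-level failure reported by the server
            raise RuntimeError(text)
        return text

    tool.__name__ = name
    tool.__doc__ = descriptor.get("description", "")
    return tool


def fake_server(raw: str) -> str:
    # Stand-in for a real MCP server, answering every tools/call the same way.
    request = json.loads(raw)
    city = request["params"]["arguments"]["city"]
    return json.dumps({
        "jsonrpc": "2.0",
        "id": request["id"],
        "result": {"content": [{"type": "text", "text": f"Sunny in {city}"}], "isError": False},
    })


get_weather = wrap_mcp_tool(
    {"name": "get_weather", "description": "Current weather for a city",
     "inputSchema": {"type": "object", "properties": {"city": {"type": "string"}}}},
    fake_server,
)
print(get_weather(city="Paris"))  # Sunny in Paris
```

A real adapter would also pass the descriptor's `inputSchema` to the framework so the model knows which arguments to supply; this sketch only keeps the name and description.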

Google Vertex AI

Vertex AI Agent Builder addresses MCP's enterprise deployment challenges with managed infrastructure that removes much of the operational complexity of running agents at scale. Google handles infrastructure concerns such as scaling, container management, and session persistence, while providing built-in MCP support. This lets your agents connect to external data sources and tools while staying within Google Cloud's security and governance framework.

Top use cases

Vertex AI is a great choice when you need integration with Google services (e.g., BigQuery, Looker, Workspace) and want enterprise-grade controls, such as audit logging, permissions based on identity and access management (IAM), and hermetic execution environments. The platform is ideal for organizations that are already invested in Google Cloud and need rapid agent deployment without custom infrastructure work.

Limitations

Vertex provides automatic scaling, comprehensive monitoring, and production-ready security controls, but those benefits come with trade-offs: vendor lock-in to Google Cloud, limited regional availability, and added complexity when integrating with non-Google systems.

Relation to MCP

Vertex AI can host MCP-compliant agents while adding enterprise security layers that address common MCP weaknesses, like inadequate authentication and missing audit trails. This gives you a way to take advantage of the growing MCP ecosystem while meeting enterprise security requirements through Google's managed infrastructure.

Cap'n Proto

Cap'n Proto addresses MCP's performance bottlenecks by eliminating JSON-RPC serialization overhead. Instead of parsing and reconstructing data, it uses zero-copy serialization: data is laid out in memory exactly as it is transmitted, so a receiver can read fields directly from the incoming buffer. Its RPC system implements capability-based security, where possessing an object reference is what grants permission to use it, which reduces the need for separate authentication and authorization checks on each call.
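To illustrate the read-in-place idea (not Cap'n Proto itself), the Python sketch below uses the standard `struct` module with a hypothetical fixed message layout. Real Cap'n Proto messages use segments, pointers, and generated accessor code; the point here is only that a receiver can pull one field out of a buffer at a known offset without decoding the rest of the message, whereas JSON must be parsed in full first. (Python still creates a float object for the single value it reads.)

```python
import struct

# Hypothetical fixed layout, for illustration only (this is NOT Cap'n Proto's
# wire format): uint32 request_id @0, uint32 item_count @4, float64 price @8.
LAYOUT = struct.Struct("<IId")
PRICE_OFFSET = 8


def encode(request_id: int, item_count: int, price: float) -> bytearray:
    # Write fields at fixed offsets: the bytes in memory are the bytes sent.
    buf = bytearray(LAYOUT.size)
    LAYOUT.pack_into(buf, 0, request_id, item_count, price)
    return buf


def read_price(message: memoryview) -> float:
    # Read one field straight from the received buffer; no other field is
    # decoded and no intermediate object tree (as with JSON parsing) is built.
    return struct.unpack_from("<d", message, PRICE_OFFSET)[0]


wire = memoryview(encode(request_id=7, item_count=3, price=19.5))
print(LAYOUT.size, read_price(wire))  # 16 19.5
```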

Top use cases

Cap'n Proto is a good fit for interprocess communication and high-speed networking where microsecond latencies matter more than developer convenience. Cloudflare uses it extensively in Workers for communication between isolated components because it handles millions of requests without serialization bottlenecks.

Limitations

Cap'n Proto doesn't address higher-level AI-tool interaction logic, so you would still need to build the tool discovery, schema descriptions, and error-handling conventions that MCP provides at the application layer, along with the agent reasoning and tool selection that sit on top of them.

Relation to MCP

Cap'n Proto operates at a fundamentally different level than MCP since it focuses on efficient data exchange rather than AI-tool integration patterns. You could theoretically replace MCP's JSON-RPC transport with Cap'n Proto while keeping MCP's tool/resource/prompt abstractions intact. This would give you MCP's standardized interface with lower message-passing overhead, potentially easing the performance issues that emerge when agents make hundreds of tool calls in complex workflows.

Merge MCP

Merge MCP operates as a managed MCP server that eliminates the need to build individual servers for each business system integration.

Merge MCP uses scope-based security, in which tools are dynamically generated from your Merge API schema and your authorized permissions.

When you configure the server with scopes like <code class="blog_inline-code">ats.Job:read</code> or <code class="blog_inline-code">crm.Contact:write</code>, Merge validates these against your Linked Account capabilities and enables tools only for valid, authorized access patterns.
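As a rough illustration of scope-based tool enablement, the Python sketch below parses scopes in the `category.Model:access` form shown above and exposes a tool only when the requested scope is also authorized for the account. This is a hypothetical sketch, not Merge's implementation: `Scope`, `parse_scope`, `enabled_tools`, the `authorized` set, and the generated tool names are all invented for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Scope:
    category: str  # e.g. "ats" or "crm"
    model: str     # e.g. "Job" or "Contact"
    access: str    # "read" or "write"


def parse_scope(raw: str) -> Scope:
    # "ats.Job:read" -> Scope(category="ats", model="Job", access="read")
    path, access = raw.split(":")
    category, model = path.split(".")
    if access not in ("read", "write"):
        raise ValueError(f"unknown access level in {raw!r}")
    return Scope(category, model, access)


def enabled_tools(requested: list[str], authorized: set[str]) -> list[str]:
    """Return hypothetical tool names only for scopes that are both requested
    and authorized for the linked account; everything else stays hidden."""
    tools = []
    for raw in requested:
        scope = parse_scope(raw)  # malformed scopes fail loudly
        if raw not in authorized:
            continue  # requested but not authorized: no tool is exposed
        verb = "list" if scope.access == "read" else "create"
        tools.append(f"{verb}_{scope.category}_{scope.model.lower()}")
    return tools


print(enabled_tools(["ats.Job:read", "crm.Contact:write"], authorized={"ats.Job:read"}))
# ['list_ats_job']
```

Generating tools from the intersection of requested and authorized scopes means an agent never even sees a tool it isn't allowed to call, rather than discovering the restriction at call time.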

The server connects through your Merge API key and Linked Account token, with tools appearing in your MCP client within minutes of configuration.

Instead of building separate MCP servers for each business system, you get one Merge MCP server handling applications within CRM, ticketing, file storage, accounting, and other categories.

Top use cases

Merge excels when enterprises need reliable customer-facing integrations at scale without building custom MCP servers for each integration.

Merge also provides enterprise-grade authentication, data encryption, and audit trails that address the kinds of MCP security vulnerabilities described above.

Merge is also ideal for organizations that want advanced data syncing features, like Field Mapping, which lets you map custom fields from platforms like HubSpot to common model fields in the Merge Unified API.

Limitations

While Merge MCP provides enhanced security and managed infrastructure, it requires MCP-compatible clients and focuses specifically on business system integrations rather than broader agent development capabilities. This means you still need to handle agent orchestration logic separately since Merge provides the data integration layer but not the AI reasoning and decision-making components.

Relation to MCP

The Merge MCP server is an enhanced, secure implementation of MCP itself, providing enterprise-grade authentication, data encryption, and trusted infrastructure with built-in privacy safeguards. Rather than replacing MCP, Merge strengthens it by adding enterprise security layers, managed infrastructure, and prebuilt integrations that eliminate the custom development work typically required for business system connectivity.

Best practices for deciding between MCP and alternative approaches

When choosing between MCP and alternative approaches, keep the following best practices in mind:

Assess security needs

Because security requirements cascade through every architectural decision, you need to assess them before choosing between MCP and alternative approaches. Enterprise data demands managed solutions that provide enterprise-grade authentication and encryption without the time and cost of building security infrastructure yourself.

The Merge MCP server addresses prompt injection vulnerabilities and authentication inconsistencies that plague standard MCP implementations. Vertex AI offers platform-level security with built-in compliance at the cost of integration flexibility.

Avoid raw MCP or self-managed setups for sensitive workloads unless you have dedicated security engineering resources.

Evaluate performance requirements

Performance needs constrain your architectural choices, so assess them upfront.

Cap'n Proto's zero-copy design can significantly outperform JSON-RPC in numeric-heavy scenarios, though relative performance varies greatly depending on data characteristics. Standard MCP implementations add network and serialization overhead that becomes problematic for high-frequency applications. Most business use cases can absorb this cost in exchange for MCP's standardization benefits. Managed platforms can add further latency, but their reliability guarantees often justify the trade-off for enterprise deployments.
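Encoded size is only one input to performance (parse time and allocations matter too), but a quick standard-library comparison shows why the gap depends on the data. This sketch compares JSON text with a raw packed binary layout, not with Cap'n Proto itself, and the payloads are arbitrary examples.

```python
import json
import struct

# Numeric-heavy payload: 1,000 fractions stored as float64.
floats = [i / 7 for i in range(1000)]
floats_json = json.dumps(floats).encode("utf-8")
floats_bin = struct.pack(f"<{len(floats)}d", *floats)  # 8 bytes per value

# Small-integer payload: here the text encoding is actually more compact.
ints = list(range(10))
ints_json = json.dumps(ints).encode("utf-8")
ints_bin = struct.pack(f"<{len(ints)}i", *ints)  # 4 bytes per value

print(len(floats_json) > len(floats_bin), len(floats_bin))  # True 8000
print(len(ints_json), len(ints_bin))  # 30 40
```

Long floating-point values need many characters as text, while small integers need only one or two, so binary formats win clearly on the first payload and not at all on the second.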

Consider integration complexity

Integration complexity grows quickly with the number of tools your agents need to access, because without a shared protocol every agent-to-system pair needs its own connector.

With MCP, instead of building a custom connector for each API, you get one protocol that works across multiple business systems. A demo that builds custom MCP servers for a GitHub, Slack, and Merge workflow shows why Merge's unified approach works well for complex integrations. If you need only a few specific integrations or have specialized requirements, custom LangChain implementations can be more efficient.
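The connector arithmetic behind this trade-off fits in a few lines. This is a simplified model that assumes every agent needs every system; the function names are hypothetical.

```python
def point_to_point_connectors(agents: int, systems: int) -> int:
    # Without a shared protocol, each agent needs its own connector per system.
    return agents * systems


def protocol_connectors(agents: int, systems: int) -> int:
    # With a shared protocol, each agent speaks it once and each system is
    # exposed once (e.g., one MCP client per agent, one server per system).
    return agents + systems


for agents, systems in [(1, 5), (3, 20), (10, 50)]:
    print(agents, systems,
          point_to_point_connectors(agents, systems),
          protocol_connectors(agents, systems))
# 1 5 5 6
# 3 20 60 23
# 10 50 500 60
```

With one agent and a handful of systems, the two approaches cost about the same, which is why custom integrations can make sense for narrow needs; the gap widens quickly as both numbers grow.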

Evaluate the ecosystem and control

Match your platform choice to your control requirements. If you're already invested in Google Cloud and want comprehensive MLOps capabilities with minimal engineering overhead, Vertex AI provides the most seamless path forward. When you need maximum control over agent behavior or custom architectures, SDKs such as LangChain or Semantic Kernel give you the flexibility to implement exactly what you envision. For lightweight, local context sharing with ChatGPT on macOS, without complex orchestration, OpenAI Work with Apps covers the basics.

Ready to integrate your AI agent with third-party apps?

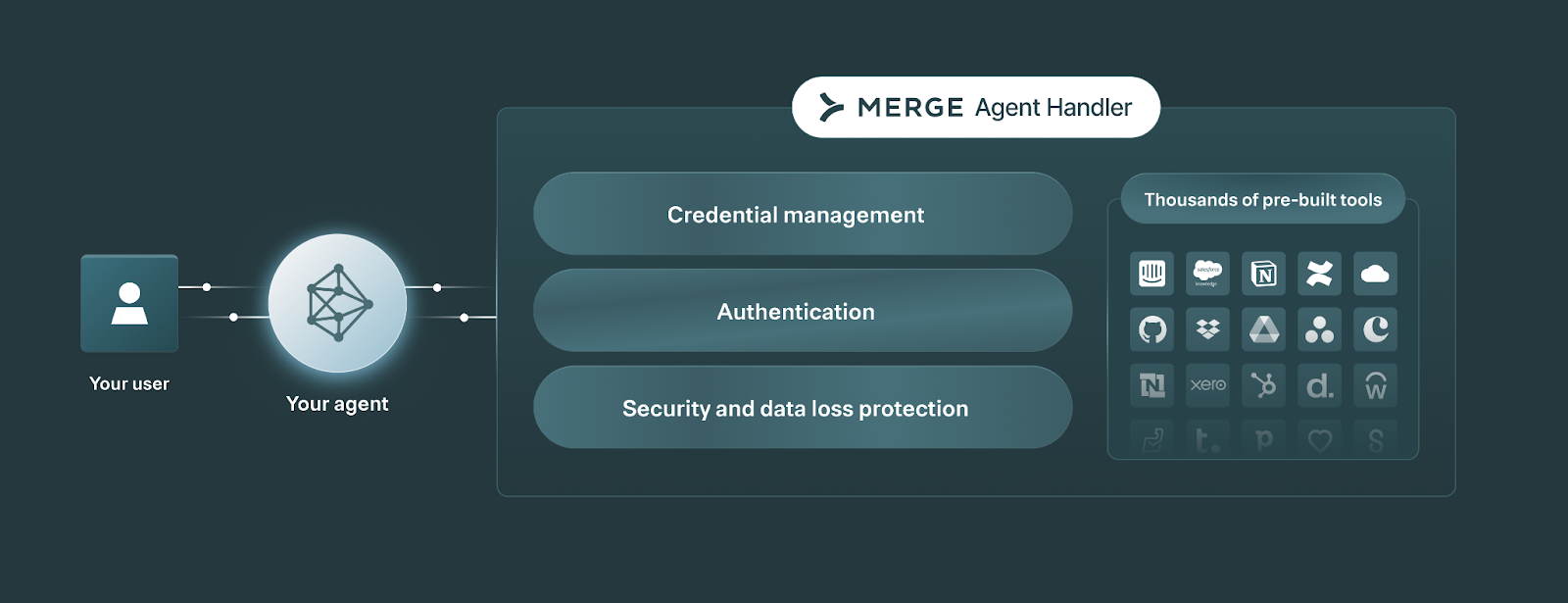

Merge Agent Handler offers a single platform to securely connect your AI agents to more than a thousand tools across dozens of pre-built connectors (and you can auto-generate many more connectors).

Merge Agent Handler also offers the features and functionality you need to monitor and manage your agents’ integrations, from customizable alerts to fully-searchable logs to audit trails.

Start testing Merge Agent Handler today by signing up for a free account!
